| Name | Modified | Size | Downloads / Week |

|---|---|---|---|

| Parent folder | |||

| README.md | 2025-07-25 | 47.6 kB | |

| v4.54.0_ Kernels, Transformers Serve, Ernie, Voxtral, LFM2, DeepSeek v2, ModernBERT Decoder... source code.tar.gz | 2025-07-25 | 18.9 MB | |

| v4.54.0_ Kernels, Transformers Serve, Ernie, Voxtral, LFM2, DeepSeek v2, ModernBERT Decoder... source code.zip | 2025-07-25 | 23.9 MB | |

| Totals: 3 Items | 42.9 MB | 1 | |

Important news!

In order to become the source of truth, we recognize that we need to address two common and long-heard critiques about transformers:

transformersis bloatedtransformersis slow

Our team has focused on improving both aspects, and we are now ready to announce this.

The modeling files for the standard Llama models are down to 500 LOC and should be much more readable, keeping just the core of the modeling and hiding the "powerful transformers features."

The MoEs are getting some kernel magic, enabling the use of the efficient megablocks kernels, setting a good precedent to allow the community to leverage any of the most powerful kernels developed for quantization as well!

It should also be much more convenient to use with any attention implementation you want. This opens the door to some optimizations such as leveraging flash-attention on Metal (MPS Torch backend).

This is but the tip of the iceberg: with the work on kernels that we're heavily pushing forward, expect speed-ups on several backends in the coming months!!

This release also includes the first steps to enabling efficient distributed training natively in transformers. Loading a 100B model takes ~3 seconds on our cluster — we hope this will be the norm for everyone! We are working on distributed checkpointing as well, and want to make sure our API can be easily used for any type of parallelism.

We want the community to benefit from all of the advances, and as always, include all hardware and platforms! We believe the kernels library will give the tools to optimize everything, making a big difference for the industry!

New models

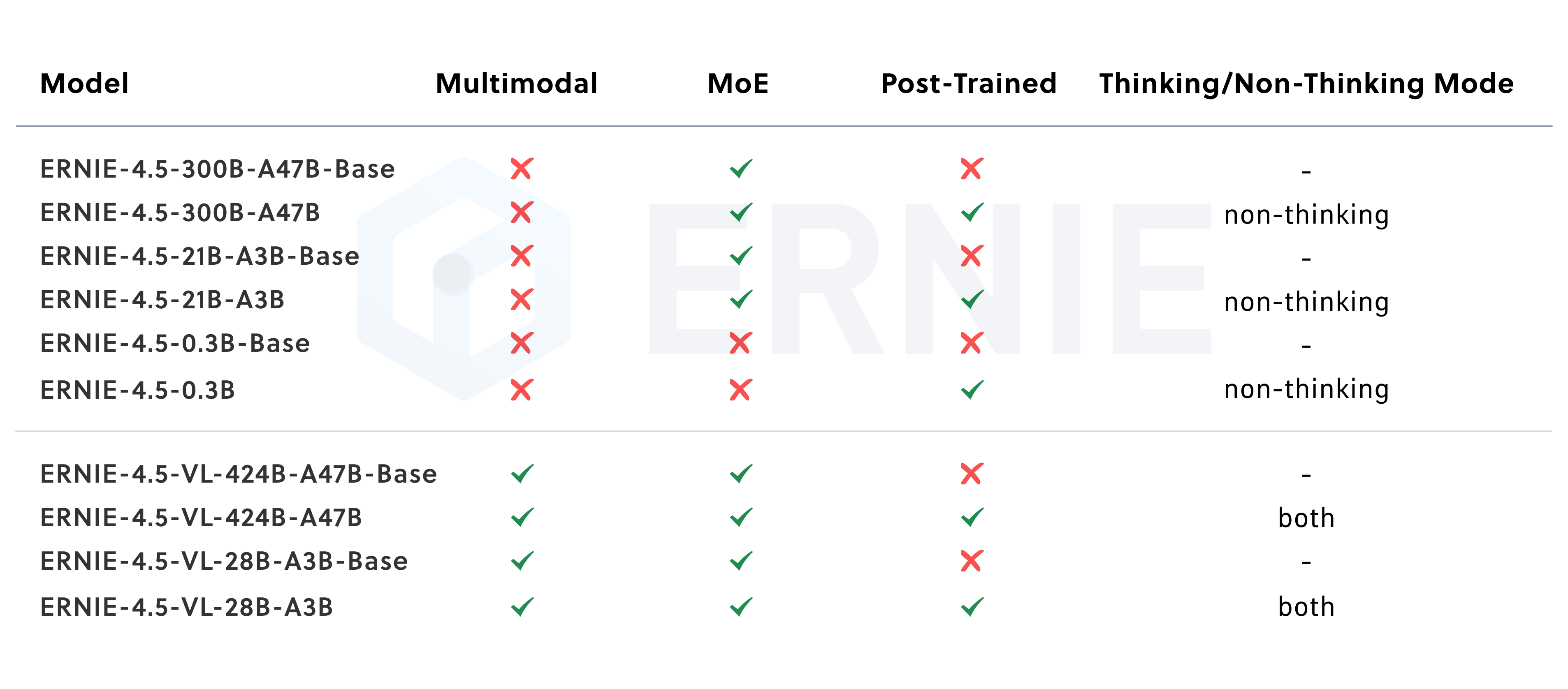

Ernie 4.5

The Ernie 4.5 model was released in the Ernie 4.5 Model Family release by baidu. This family of models contains multiple different architectures and model sizes. This model in specific targets the base text model without mixture of experts (moe) with 0.3B parameters in total. It uses the standard Llama at its core.

Other models from the family can be found at Ernie 4.5 MoE.

- [

Ernie 4.5] Add ernie text models by @vasqu in [#39228]

Voxtral

Voxtral is an upgrade of Ministral 3B and Mistral Small 3B, extending its language capabilities with audio input support. It is designed to handle tasks such as speech transcription, translation, and audio understanding.

You can read more in Mistral's realease blog post.

The model is available in two checkpoints: - 3B: mistralai/Voxtral-Mini-3B-2507 - 24B: mistralai/Voxtral-Small-24B-2507

Key Features

Voxtral builds on Ministral-3B by adding audio processing capabilities:

- Transcription mode: Includes a dedicated mode for speech transcription. By default, Voxtral detects the spoken language and transcribes it accordingly.

- Long-form context: With a 32k token context window, Voxtral can process up to 30 minutes of audio for transcription or 40 minutes for broader audio understanding.

- Integrated Q&A and summarization: Supports querying audio directly and producing structured summaries without relying on separate ASR and language models.

- Multilingual support: Automatically detects language and performs well across several widely spoken languages, including English, Spanish, French, Portuguese, Hindi, German, Dutch, and Italian.

- Function calling via voice: Can trigger functions or workflows directly from spoken input based on detected user intent.

-

Text capabilities: Maintains the strong text processing performance of its Ministral-3B foundation.

-

Add voxtral by @eustlb in [#39429]

LFM2

LFM2 represents a new generation of Liquid Foundation Models developed by Liquid AI, specifically designed for edge AI and on-device deployment.

The models are available in three sizes (350M, 700M, and 1.2B parameters) and are engineered to run efficiently on CPU, GPU, and NPU hardware, making them particularly well-suited for applications requiring low latency, offline operation, and privacy.

- LFM2 by @paulpak58 in [#39340]

DeepSeek v2

The DeepSeek-V2 model was proposed in DeepSeek-V2: A Strong, Economical, and Efficient Mixture-of-Experts Language Model by DeepSeek-AI Team.

The model uses Multi-head Latent Attention (MLA) and DeepSeekMoE architectures for efficient inference and cost-effective training. It employs an auxiliary-loss-free strategy for load balancing and multi-token prediction training objective. The model can be used for various language tasks after being pre-trained on 14.8 trillion tokens and going through Supervised Fine-Tuning and Reinforcement Learning stages.

- Add DeepSeek V2 Model into Transformers by @VladOS95-cyber in [#36400]

ModernBERT Decoder models

ModernBERT Decoder is the same architecture as ModernBERT but trained from scratch with a causal language modeling (CLM) objective. This allows for using the same architecture for comparing encoders and decoders. This is the decoder architecture implementation of ModernBERT, designed for autoregressive text generation tasks.

Like the encoder version, ModernBERT Decoder incorporates modern architectural improvements such as rotary positional embeddings to support sequences of up to 8192 tokens, unpadding to avoid wasting compute on padding tokens, GeGLU layers, and alternating attention patterns. However, it uses causal (unidirectional) attention to enable autoregressive generation.

- Add ModernBERT Decoder Models - ModernBERT, but trained with CLM! by @orionw in [#38967]

EoMT

The Encoder-only Mask Transformer (EoMT) model was introduced in the CVPR 2025 Highlight Paper Your ViT is Secretly an Image Segmentation Model by Tommie Kerssies, Niccolò Cavagnero, Alexander Hermans, Narges Norouzi, Giuseppe Averta, Bastian Leibe, Gijs Dubbelman, and Daan de Geus. EoMT reveals Vision Transformers can perform image segmentation efficiently without task-specific components.

- ✨ Add EoMT Model || 🚨 Fix Mask2Former loss calculation by @yaswanth19 in [#37610]

Doge

Doge is a series of small language models based on the Doge architecture, aiming to combine the advantages of state-space and self-attention algorithms, calculate dynamic masks from cached value states using the zero-order hold method, and solve the problem of existing mainstream language models getting lost in context. It uses the wsd_scheduler scheduler to pre-train on the smollm-corpus, and can continue training on new datasets or add sparse activation feedforward networks from stable stage checkpoints.

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/refs%2Fpr%2F426/transformers/model_doc/doge_architecture.png" alt="drawing" width="600"

- Add Doge model by @LoserCheems in [#35891]

AIM v2

The AIMv2 model was proposed in Multimodal Autoregressive Pre-training of Large Vision Encoders by Enrico Fini, Mustafa Shukor, Xiujun Li, Philipp Dufter, Michal Klein, David Haldimann, Sai Aitharaju, Victor Guilherme Turrisi da Costa, Louis Béthune, Zhe Gan, Alexander T Toshev, Marcin Eichner, Moin Nabi, Yinfei Yang, Joshua M. Susskind, Alaaeldin El-Nouby.

The abstract from the paper is the following:

We introduce a novel method for pre-training of large-scale vision encoders. Building on recent advancements in autoregressive pre-training of vision models, we extend this framework to a multimodal setting, i.e., images and text. In this paper, we present AIMV2, a family of generalist vision encoders characterized by a straightforward pre-training process, scalability, and remarkable performance across a range of downstream tasks. This is achieved by pairing the vision encoder with a multimodal decoder that autoregressively generates raw image patches and text tokens. Our encoders excel not only in multimodal evaluations but also in vision benchmarks such as localization, grounding, and classification. Notably, our AIMV2-3B encoder achieves 89.5% accuracy on ImageNet-1k with a frozen trunk. Furthermore, AIMV2 consistently outperforms state-of-the-art contrastive models (e.g., CLIP, SigLIP) in multimodal image understanding across diverse settings.

- Add Aimv2 model by @yaswanth19 in [#36625]

PerceptionLM

The PerceptionLM model was proposed in PerceptionLM: Open-Access Data and Models for Detailed Visual Understanding by Jang Hyun Cho et al. It's a fully open, reproducible model for transparent research in image and video understanding. PLM consists of a vision encoder with a small scale (<8B parameters) LLM decoder.

- PerceptionLM by @shuminghu in [#37878]

Efficient LoFTR

The EfficientLoFTR model was proposed in Efficient LoFTR: Semi-Dense Local Feature Matching with Sparse-Like Speed by Yifan Wang, Xingyi He, Sida Peng, Dongli Tan and Xiaowei Zhou.

This model consists of matching two images together by finding pixel correspondences. It can be used to estimate the pose between them. This model is useful for tasks such as image matching, homography estimation, etc.

- Add EfficientLoFTR model by @sbucaille in [#36355]

EVOLLA

Evolla is an advanced 80-billion-parameter protein-language generative model designed to decode the molecular language of proteins. It integrates information from protein sequences, structures, and user queries to generate precise and contextually nuanced insights into protein function. Trained on an unprecedented AI-generated dataset of 546 million protein question-answer pairs and 150 billion word tokens, Evolla significantly advances research in proteomics and functional genomics, providing expert-level insights and shedding light on the molecular logic encoded in proteins.

- Add evolla rebase main by @zhoubay in [#36232]

DeepSeek VL

Deepseek-VL was introduced by the DeepSeek AI team. It is a vision-language model (VLM) designed to process both text and images for generating contextually relevant responses. The model leverages LLaMA as its text encoder, while SigLip is used for encoding images.

- Add support for DeepseekAI's DeepseekVL by @geetu040 in [#36248]

xLSTM

The xLSTM model was proposed in xLSTM: Extended Long Short-Term Memory by Maximilian Beck, Korbinian Pöppel, Markus Spanring, Andreas Auer, Oleksandra Prudnikova, Michael Kopp, Günter Klambauer, Johannes Brandstetter and Sepp Hochreiter. xLSTM updates the original LSTM architecture to be competitive with Transformer models by introducing exponential gating, matrix memory expansion, and parallelizable training and ingestion.

The 7B model variant was trained by the xLSTM team Maximilian Beck, Korbinian Pöppel, Phillip Lippe, Richard Kurle, Patrick Blies, Sebastian Böck and Sepp Hochreiter at NXAI.

- Add xlstm model by @Cyrilvallez in [#39665]

EXAONE 4.0

EXAONE 4.0 model is the language model, which integrates a Non-reasoning mode and Reasoning mode to achieve both the excellent usability of EXAONE 3.5 and the advanced reasoning abilities of EXAONE Deep. To pave the way for the agentic AI era, EXAONE 4.0 incorporates essential features such as agentic tool use, and its multilingual capabilities are extended to support Spanish in addition to English and Korean.

The EXAONE 4.0 model series consists of two sizes: a mid-size 32B model optimized for high performance, and a small-size 1.2B model designed for on-device applications.

- Add EXAONE 4.0 model by @lgai-exaone in [#39129]

Parallelisation

We've added Expert Parallel support for Llama4, next release will include it for all model! You can just set a distributed_config with enable_expert_parallel=True. This is enabling efficient training of sparse Mixture-of-Experts (MoE) models across multiple devices. This allows each expert in the MoE layer to run in parallel (instead of previous TP which requires more communication), significantly improving scalability and memory efficiency.

- Add ep by @ArthurZucker in [#39501]

Quantization

FP Quant

FP-Quant is a quantization method optimized for Blackwell-generation Nvidia GPUs, supporting efficient post-training quantization (PTQ) and quantization-aware training (QAT) of LLMs using MXFP4 and NVFP4 formats.

Currently, only PTQ with MXFP4 is available. You can quantize models on-the-fly using transformers:

from transformers import AutoModelForCausalLM, FPQuantConfig

model = AutoModelForCausalLM.from_pretrained(

"qwen/Qwen3-8B",

quantization_config=FPQuantConfig(),

device_map="cuda",

torch_dtype=torch.bfloat16,

)

FP-Quant requires a Blackwell GPU and runs via the QuTLASS library. No Blackwell GPU? Use FPQuantConfig(pseudoquant=True) to emulate quantization (no QuTLASS needed).

The following results show the inference speedup of QuTLASS MXFP4 over PyTorch BF16 in Transformers. MXFP4 gives consistent speedups across all batch sizes, reaching up to 4× faster at larger scales.

- FP-Quant support by @BlackSamorez in [#38696]

Kernels

The kernels project aims to become the single trusted source for high-performance kernels in the Transformers ecosystem. We're working toward centralizing all kernels on the Hub, so updates, bug fixes, and improvements can happen in one place—no more scattered repos and no compilation headaches!

You can already try it out today by setting use_kernels=True in from_pretrained. Any contributor can build their kernel, register it and use it right away—no extra setup, more on this here

Even better: want to use Flash Attention 3? No need to deal with tricky compilation and missing symbols issues! Just drop in:

:::python

model.set_attn_implementation("kernels-community/flash-attn3")

This automatically fetches the right build for your setup (e.g. CUDA and PyTorch versions).

We’re also teaming up with amazing kernel devs from Unsloth, Liger, vLLM, and more to bring their work directly to the Hub—making it easier than ever to access amazing performance with a single line of code.

- Kernels flash attn by @ArthurZucker in [#39474]

Transformers Serve

https://github.com/user-attachments/assets/9928f62b-543c-4b8a-b81b-4a6e262c229e

Over the past few months, we have been putting more and more functionality in the transformers chat utility, which offers a CLI-based app to chat with chat models. We've chosen to push this further by splitting the backend of transformers chat in a new, separate utility called transformers serve.

This is ideal for experimentation purposes, or to run models locally for personal and private use. It does not aim to compete with dedicated inference engines such as vLLM or SGLang.

Models of diverse modalities supported by transformers may be served with the transformers serve CLI. It spawns a local server that offers compatibility with the OpenAI SDK, which is the de-facto standard for LLM conversations and other related tasks. This way, you can use the server from many third party applications, or test it using the transformers chat CLI (docs).

The server supports the following REST APIs:

/v1/chat/completions/v1/responses/v1/audio/transcriptions/v1/models

Relevant commits:

- Split

transformers chatandtransformers serveby @LysandreJik in [#38443] - [serve] Cursor support, move docs into separate page, add more examples by @gante in [#39133]

- Fix continuous batching in

transformers serveby @LysandreJik in [#39149] - [server] add tests and fix passing a custom

generation_configby @gante in [#39230] - [serve] Model name or path should be required by @LysandreJik in [#39178]

- Random serve fixes by @pcuenca in [#39176]

- [tests] tag serve tests as slow by @gante in [#39343]

- Responses API in

transformers serveby @LysandreJik in [#39155] - [serve] Add speech to text (

/v1/audio/transcriptions) by @gante in [#39434] - Transformers serve VLM by @LysandreJik in [#39454]

Refactors

Significant refactors have been underway in transformers, aiming to reduce the complexity of the code. A metric we follow to see how the refactors impact our code is to follow the number of lines in a given model; we try to reduce it as much as possible, while keeping everything related to the forward pass and model definition in that file.

See the evolution here:

Some notable refactors:

KV caching

KV caches are now defined per layer, enabling new hybrid caches that mix different attention types. CacheProcessors also encapsulate cache quantization and offloading, making them easy to customize.

- [cache refactor] Move all the caching logic to a per-layer approach by @manueldeprada in [#39106]

Handling specific attributes like output_attentions or output_hidden_states

Such attributes require very specific handling within the forward call, while they're not important to understand how the model works. We remove that code but keep the functionality by providing a better utility to handle it.

- Refactor the way we handle outputs for new llamas and new models by @ArthurZucker in [#39120]

Setting the attention implementation

We refactor the way to explicitly set the attention implementation so that it has a method dedicated to it.

- [refactor] set attention implementation by @zucchini-nlp in [#38974]

Breaking changes

- [Whisper] 🚨 Fix pipeline word timestamp: timestamp token is end of token time !!! by @eustlb in [#36632]

- 🚨 Don't use cache in non-generative models by @zucchini-nlp in [#38751]

- 🚨🚨🚨 [eomt] make EoMT compatible with pipeline by @yaswanth19 in [#39122]

- 🚨🚨 Fix and simplify attention implementation dispatch and subconfigs handling by @Cyrilvallez in [#39423]

- 🚨🚨🚨 [Trainer] Enable

average_tokens_across_devicesby default inTrainingArgumentsby @Krish0909 in [#39395] - 🔴 Fix EnCodec internals and integration tests by @ebezzam in [#39431]

Bugfixes and improvements

- Add StableAdamW Optimizer by @SunMarc in [#39446]

- [

Flex Attn] Fix torch 2.5.1 incompatibilities by @vasqu in [#37406] - fix

test_compare_unprocessed_logit_scoresby @ydshieh in [#39053] - fix

t5gemmatests by @ydshieh in [#39052] - Update SuperPoint model card by @sbucaille in [#38896]

- fix

layoutlmv3tests by @ydshieh in [#39050] - [docs] Model contribution by @stevhliu in [#38995]

- Update PEGASUS-X model card by @dross20 in [#38971]

- [docs] @auto_docstring by @stevhliu in [#39011]

- [docs] Tensor parallelism by @stevhliu in [#38241]

- [Whisper] fix shape mismatch in tests by @eustlb in [#39074]

- Cleanup Attention class for Siglip and dependent models by @yaswanth19 in [#39040]

- fix

Gemma3nProcessorTestby @ydshieh in [#39068] - Fix initialization of OneFormer by @bvantuan in [#38901]

- Uninstallling Flash attention from quantization docker by @MekkCyber in [#39078]

- fix a bunch of XPU UT failures on stock PyTorch 2.7 and 2.8 by @yao-matrix in [#39069]

- Pipeline: fix unnecessary warnings by @eustlb in [#35753]

- fix

mistral3tests by @ydshieh in [#38989] - fixed typo for docstring in prepare_inputs method by @JINO-ROHIT in [#39071]

- TST PEFT integration tests with pipeline generate by @BenjaminBossan in [#39086]

- add fast image processor nougat by @NahieliV in [#37661]

- Add Fast Image Processor for mobileViT by @MinJu-Ha in [#37143]

- guard torch distributed check by @tvukovic-amd in [#39057]

- fix

dots1tests by @ydshieh in [#39088] - Add Fast Image Processor for Chameleon by @farrosalferro in [#37140]

- Fix: unprotected import of tp plugin by @S1ro1 in [#39083]

- TST Fix PEFT integration test bitsandbytes config by @BenjaminBossan in [#39082]

- [fix] Add FastSpeech2ConformerWithHifiGan by @stevhliu in [#38207]

- Sandeepyadav1478/2025 06 19 deberta v2 model card update by @sandeepyadav1478 in [#38895]

- Fixes the failing test

test_is_split_into_wordsintest_pipelines_token_classification.pyby @st81 in [#39079] - skip some

test_sdpa_can_dispatch_on_flashby @ydshieh in [#39092] - fix UT failures on XPU w/ stock PyTorch 2.7 & 2.8 by @yao-matrix in [#39116]

- Fix some bug for finetune and batch infer For GLM-4.1V by @zRzRzRzRzRzRzR in [#39090]

- docs: Gemma 3n audio encoder by @RyanMullins in [#39087]

- All CI jobs with A10 by @ydshieh in [#39119]

- Licenses by @LysandreJik in [#39127]

- Fix chat by @gante in [#39128]

- Enable XPU doc by @jiqing-feng in [#38929]

- docs: correct two typos in awesome-transformers.md by @VladimirGutuev in [#39102]

- switch default xpu tp backend to pytorch built-in XCCL from pytorch 2.8 by @yao-matrix in [#39024]

- Update BigBirdPegasus model card by @dross20 in [#39104]

- [Whisper] update token timestamps tests by @eustlb in [#39126]

- Fix key mapping for VLMs by @bvantuan in [#39029]

- Several fixes for Gemma3n by @Cyrilvallez in [#39135]

- fix caching_allocator_warmup with tie weights by @jiqing-feng in [#39070]

- feat: support indivisible shards for TP model loading and TPlizing. by @kmehant in [#37220]

- [qwen2-vl] fix FA2 inference by @zucchini-nlp in [#39121]

- [typing] LlamaAttention return typehint by @ArkVex in [#38998]

- [VLMs] support passing embeds along with pixels by @zucchini-nlp in [#38467]

- [superglue] fix wrong concatenation which made batching results wrong by @sbucaille in [#38850]

- Fix missing fsdp & trainer jobs in daily CI by @ydshieh in [#39153]

- Fix: Ensure wandb logs config in offline mode by @DavidS2106 in [#38992]

- Change

@lru_cache()to@lru_cacheto match styles from [#38883]. by @rasmi in [#39093] - fix: remove undefined variable by @ybkurt in [#39146]

- update bnb ground truth by @jiqing-feng in [#39117]

- Suggest jobs to use in

run-slowby @ydshieh in [#39100] - Update expected values (after switching to A10) by @ydshieh in [#39157]

- fix

llamatests by @ydshieh in [#39161] - Add activation sparsity reference in gemma3n doc by @ChongYou in [#39160]

- fix default value of config to match checkpionts in LLaVa-OV models by @ved1beta in [#39163]

- [smolvlm] fix video inference by @zucchini-nlp in [#39147]

- Fix multimodal processor get duplicate arguments when receive kwargs for initialization by @Isotr0py in [#39125]

- Blip2 fixes by @remi-or in [#39080]

- Fix missing initializations for models created in 2024 by @bvantuan in [#38987]

- Reduce Glm4v model test size significantly by @Cyrilvallez in [#39173]

- [docs] ViTPose by @stevhliu in [#38630]

- [generate] document non-canonical beam search default behavior by @gante in [#39000]

- Update expected values (after switching to A10) - part 2 by @ydshieh in [#39165]

- Update expected values (after switching to A10) - part 3 by @ydshieh in [#39179]

- Test fixes for Aria (and some Expectation for llava_next_video) by @remi-or in [#39131]

- [glm4v] fix video inference by @zucchini-nlp in [#39174]

- when delaying optimizer creation only prepare the model by @winglian in [#39152]

- Decouple device_map='auto' and tp_plan='auto' by @SunMarc in [#38942]

- Fix many HPU failures in the CI by @IlyasMoutawwakil in [#39066]

- [

Dia] Change ckpt path in docs by @vasqu in [#39181] - Update expected values (after switching to A10) - part 4 by @ydshieh in [#39189]

- [typing] better return typehints for

from_pretrainedby @qubvel in [#39184] - Update expected values (after switching to A10) - part 5 by @ydshieh in [#39205]

- Update expected values (after switching to A10) - part 6 by @ydshieh in [#39207]

- Add packed tensor format support for flex/sdpa/eager through the mask! by @Cyrilvallez in [#39194]

- Update expected values (after switching to A10) - part 7 by @ydshieh in [#39218]

- Update expected values (after switching to A10) - part 8 - Final by @ydshieh in [#39220]

- [video processors] Support float fps for precise frame sampling by @zrohyun in [#39134]

- Expectations re-order and corrected FA3 skip by @remi-or in [#39195]

- [vjepa2] replace einsum with unsqueeze by @xenova in [#39234]

- Fix missing fast tokenizer/image_processor in whisper/qwen2.5-omni processor by @Isotr0py in [#39244]

- [modular] Follow global indexing and attribute setting, and their dependencies by @Cyrilvallez in [#39180]

- fix typo in Gemma3n notes by @davanstrien in [#39196]

- Don't send new comment if the previous one is less than 30 minutes (unless the content is changed) by @ydshieh in [#39170]

- fix bug using FSDP V1 will lead to model device not properly set by @kaixuanliu in [#39177]

- Make _compute_dynamic_ntk_parameters exportable by @xadupre in [#39171]

- [modular] Simplify logic and docstring handling by @Cyrilvallez in [#39185]

- [bugfix] fix flash attention 2 unavailable error on Ascend NPU by @FightingZhen in [#39166]

- fix

fastspeech2_conformertests by @ydshieh in [#39229] - RotaryEmbeddings change

is not None->isinstance(..., dict)by @qubvel in [#39145] - Fix patch helper by @Cyrilvallez in [#39216]

- enable xpu on kv-cache and hqq doc by @jiqing-feng in [#39246]

- adjust input and output texts for test_modeling_recurrent_gemma.py by @kaixuanliu in [#39190]

- Update tiny-agents example by @Wauplin in [#39245]

- Add Korean translation for glossary.md by @JoosunH in [#38804]

- Clarify per_device_train_batch_size scaling in TrainingArguments by @Shohail-Ismail in [#38]…

- Add

segmentation_mapssupport to MobileNetV2ImageProcessor by @simonreise in [#37312] - Simplify Mixtral and its modular children by @Cyrilvallez in [#39252]

- fix some flaky tests in

tests/generation/test_utils.pyby @ydshieh in [#39254] - Update LED model card by @dross20 in [#39233]

- Glm 4 doc by @zRzRzRzRzRzRzR in [#39247]

- fix xpu failures on PT 2.7 and 2.8 w/o IPEX and enable hqq cases on XPU by @yao-matrix in [#39187]

- Fix license text, duplicate assignment, and typo in constant names by @gudwls215 in [#39250]

- Skip

test_eager_matches sdpa generateand update an integration test for blip-like models by @ydshieh in [#39248] - remove broken block by @molbap in [#39255]

- fix(generation): stop beam search per-instance when heuristic satisfied by @guang-yng in [#38778]

- fix recompiles due to instance key, and deepcopy issues by @ArthurZucker in [#39270]

- Fix errors when use verl to train GLM4.1v model by @kaln27 in [#39199]

- [CI] fix docs by @gante in [#39273]

- [pagged-attention] fix off-by-1 error in pagged attention generation by @kashif in [#39258]

- [smollm3] add tokenizer mapping for

smollm3by @gante in [#39271] - Refactor

PretrainedConfig.__init__method to make it more explicit by @qubvel in [#39158] - fix flaky

test_generate_compile_model_forwardby @ydshieh in [#39276] - [lightglue] add support for remote code DISK keypoint detector by @sbucaille in [#39253]

- Add torchcodec in docstrings/tests for

datasets4.0 by @lhoestq in [#39156] - Update T5gemma by @bzhangGo in [#39210]

- [Tests] Update model_id in AIMv2 Tests by @yaswanth19 in [#39281]

- Fix SDPA attention precision issue in Qwen2.5-VL by @JJJYmmm in [#37363]

- [flash attn 3] bring back flags by @zucchini-nlp in [#39294]

- fix

ariatests by @ydshieh in [#39277] - skip

test_torchscript_*for now until the majority of the community ask for it by @ydshieh in [#39307] - [modular] Allow method with the same name in case of @property decorator by @Cyrilvallez in [#39308]

- [sliding window] revert and deprecate by @zucchini-nlp in [#39301]

- 🌐 [i18n-KO] Translated quark.md to Korean by @maximizemaxwell in [#39268]

- Fix consistency and a few docstrings warnings by @Cyrilvallez in [#39314]

- add

stevhliuto the list inself-comment-ci.ymlby @ydshieh in [#39315] - Updated the Model docs - for the MARIAN model by @emanrissha in [#39138]

- skip files in

src/for doctest (for now) by @ydshieh in [#39316] - docs: update LLaVA-NeXT model card by @Bpriya42 in [#38894]

- Fix typo: langauge -> language by @tomaarsen in [#39317]

- Granite speech speedups by @avihu111 in [#39197]

- Fix

max_length_qandmax_length_ktypes toflash_attn_varlen_funcby @HollowMan6 in [#37206] - enable static cache on TP model by @jiqing-feng in [#39164]

- Fix broken SAM after [#39120] by @yonigozlan in [#39289]

- Delete deprecated stuff by @zucchini-nlp in [#38838]

- fix Glm4v batch videos forward by @Kuangdd01 in [#39172]

- fix

phi3tests by @ydshieh in [#39312] - Handle DAC conversion when using weight_norm with newer PyTorch versions by @edwko in [#36393]

- [modeling][lfm2] LFM2: Remove deprecated seen_tokens by @paulpak58 in [#39342]

- [Core] [Offloading] Enable saving offloaded models with multiple shared tensor groups by @kylesayrs in [#39263]

- Add a default value for

position_idsin masking_utils by @Cyrilvallez in [#39310] - [modular] speedup check_modular_conversion with multiprocessing by @qubvel in [#37456]

- Updated Switch Transformers model card with standardized format (Issue [#36979]) by @giuseppeCoccia in [#39305]

- Fix link for testpypi by @Cyrilvallez in [#39360]

- update cb TP by @ArthurZucker in [#39361]

- fix failing

test_sdpa_can_dispatch_on_flashby @ydshieh in [#39259] - Verbose error in fix mode for utils/check_docstrings.py by @manueldeprada in [#38915]

- Remove device check in HQQ quantizer by @learning-chip in [#39299]

- Add mistral common support by @juliendenize in [#38906]

- Update Readme to Run Multiple Choice Script from Example Directory by @eromomon in [#39323]

- Updated CamemBERT model card to new standardized format by @MShaheerMalik77 in [#39227]

- fix gpt2 usage doc by @Xiang-cd in [#39351]

- Update Model Card for Encoder Decoder Model by @ParagEkbote in [#39272]

- update docker file to use latest

timm(forperception_lm) by @ydshieh in [#39380] - Fix overriding Fast Image/Video Processors instance attributes affect other instances by @yonigozlan in [#39363]

- [shieldgemma] fix checkpoint loading by @zucchini-nlp in [#39348]

- [BLIP] remove cache from Qformer by @zucchini-nlp in [#39335]

- [Qwen2.5-VL] Fix torch.finfo() TypeError for integer attention_mask_tensor by @dsnsabari in [#39333]

- Deprecate AutoModelForVision2Seq by @zucchini-nlp in [#38900]

- Fix Lfm2 and common tests by @Cyrilvallez in [#39398]

- [examples] fix do_reduce_labels argument for run_semantic_segmentation_no_trainer by @eromomon in [#39322]

- Totally rewrite how pipelines load preprocessors by @Rocketknight1 in [#38947]

- Use np.pad instead of np.lib.pad. by @rasmi in [#39346]

- [Docs] Fix typo in CustomTrainer compute_loss method and adjust loss reduction logic by @MilkClouds in [#39391]

- Update phi4_multimodal.md by @tanuj-rai in [#38830]

- [siglip] fix pooling comment by @sameerajashyam in [#39378]

- Fix typo in

/v1/modelsoutput payload by @alvarobartt in [#39414] - support loading qwen3 gguf by @44670 in [#38645]

- Ignore extra position embeddings weights for ESM by @Rocketknight1 in [#39063]

- set document_question_answering pipeline _load_tokenizer to True by @jiqing-feng in [#39411]

- Fix invalid property by @cyyever in [#39384]

- refactor: remove

set_tracer_providerandset_meter_providercalls by @McPatate in [#39422] - Fix bugs from pipeline preprocessor overhaul by @Rocketknight1 in [#39425]

- Fix bugs in pytorch example run_clm when streaming is enabled by @HRezaei in [#39286]

- Remove deprecated audio utils functions by @jiangwangyi in [#39330]

- Remove residual quantization attribute from dequantized models by @DWarez in [#39373]

- handle training summary when creating modelcard but offline mode is set by @winglian in [#37095]

- [vlm] fix loading of retrieval VLMs by @zucchini-nlp in [#39242]

- docs: update SuperGlue docs by @sbucaille in [#39406]

- docs: update LightGlue docs by @sbucaille in [#39407]

- CI workflow for performed test regressions by @ahadnagy in [#39198]

- [autodocstring] add video and audio inputs by @zucchini-nlp in [#39420]

- [Core] [Offloading] Fix saving offloaded submodules by @kylesayrs in [#39280]

- Remove double soft-max in load-balancing loss. Fixes [#39055] . by @rudolfwilliam in [#39056]

- Fixed a bug calculating cross entropy loss in

JetMoeForCausalLMby @Phoenix-Shen in [#37830] - [chat template] add a testcase for kwargs by @zucchini-nlp in [#39415]

- Fix L270 - hasattr("moe_args") returning False error by @wjdghks950 in [#38715]

- Defaults to adamw_torch_fused for Pytorch>=2.8 by @cyyever in [#37358]

- Change log level from warning to info for scheduled request logging in

ContinuousBatchProcessorby @qgallouedec in [#39372] - Add cosine_with_min_lr_schedule_with_warmup_lr_rate scheduler in Trainer by @richardodliu in [#31870]

- Fix missing definition of diff_file_url in notification service by @ahadnagy in [#39445]

- add test scanner by @molbap in [#39419]

- Remove runtime conditions for type checking by @cyyever in [#37340]

- docs: add missing numpy import to minimal example by @IliasAarab in [#39444]

- [cache] make all classes cache compatible finally by @zucchini-nlp in [#38635]

- Fix typo in generation configuration for Janus model weight conversion by @thisisiron in [#39432]

- Better typing for model.config by @qubvel in [#39132]

- [Bugfix] [Quantization] Remove unused init arg by @kylesayrs in [#39324]

- Fix processor tests by @zucchini-nlp in [#39450]

- Remove something that should have never been there by @ArthurZucker in [#38254]

- make the loss context manager easier to extend by @winglian in [#39321]

- Fixes [#39204]: add fallback if get_base_model missing by @sebastianvlad1 in [#39226]

- [

CI] Fix partially red CI by @vasqu in [#39448] - Updated Megatron conversion script for gpt2 checkpoints by @LckyLke in [#38969]

- Fix indentation bug in SmolVLM image processor causing KeyError by @Krish0909 in [#39452]

- fix cached file error when repo type is dataset by @hiyouga in [#36909]

- Improve grammar and clarity in perf_hardware.md by @ridima11 in [#39428]

- create ijepa modelcard (ref : PR [#36979] ). by @dhruvmalik007 in [#39354]

- Corrections to PR [#38642] and enhancements to Wav2Vec2Processor call and pad docstrings by @renet10 in [#38822]

- fix(pipelines): QA pipeline returns fewer than top_k results in batch mode by @yushi2006 in [#39193]

- fix max_length calculating using cu_seq_lens by @KKZ20 in [#39341]

- Fix tests due to breaking change in accelerate by @SunMarc in [#39451]

- Use newer typing notation by @cyyever in [#38934]

- fix a comment typo in utils.py by @klimarissa17 in [#39459]

- Update

GemmaIntegrationTest::test_model_2b_bf16_dolaby @ydshieh in [#39362] - Fix convert_and_export_with_cache failures for GPU models by @Stonepia in [#38976]

- Enable some ruff checks for performance and readability by @cyyever in [#39383]

- fix: ImageTextToTextPipeline handles user-defined generation_config by @peteryschneider in [#39374]

- Update integration_utils.py by @zhaiji0727 in [#39469]

- Add unified logits_to_keep support to LLMClass by @hellopahe in [#39472]

- Fix typing order by @Tavish9 in [#39467]

- [dependencies] temporary pyarrow pin by @gante in [#39496]

- Slack CI bot: set default result for non-existing artifacts by @ahadnagy in [#39499]

- [dependencies] Update

datasetspin by @gante in [#39500] - [chat template] return assistant mask in processors by @zucchini-nlp in [#38545]

- [gemma3] Fix do_convert_rgb in image processors. by @MohitIntel in [#39438]

- Fix BatchEncoding.to() for nested elements by @eginhard in [#38985]

- Add fast image processor SAM by @yonigozlan in [#39385]

- Improve @auto_docstring doc and rename

args_doc.pytoauto_docstring.pyby @yonigozlan in [#39439] - Update SAM/SAM HQ attention implementation + fix Cuda sync issues by @yonigozlan in [#39386]

- Fix placeholders replacement logic in auto_docstring by @yonigozlan in [#39433]

- [gemma3] support sequence classification task by @zucchini-nlp in [#39465]

- [qwen2 vl] fix packing with all attentions by @zucchini-nlp in [#39447]

- GLM-4 Update by @zRzRzRzRzRzRzR in [#39393]

- Fix bad tensor shape in failing Hubert test. by @ebezzam in [#39502]

- Fix the check in flex test by @Cyrilvallez in [#39548]

- Rename

_supports_flash_attn_2in examples and tests by @zucchini-nlp in [#39471] - Fix Qwen Omni integration test by @Cyrilvallez in [#39553]

- Fix pylint warnings by @cyyever in [#39477]

- Raise

TypeErrorinstead of ValueError for invalid types by @Sai-Suraj-27 in [#38660] - Fix missing initializations for models created in 2023 by @bvantuan in [#39239]

- use the enable_gqa param in torch.nn.functional.scaled_dot_product_at… by @sywangyi in [#39412]

- Fix Docstring of BarkProcessor by @st81 in [#39546]

- Refactor

MambaCachetomodeling_mamba.pyby @manueldeprada in [#38086] - fix ndim check of device_mesh for TP by @winglian in [#39538]

- [Fast image processor] refactor fast image processor glm4v by @yonigozlan in [#39490]

- 🌐 [i18n-KO] Translated

perf_infer_gpu_multi.mdto Korean by @luckyvickyricky in [#39441] - Refactor embedding input/output getter/setter by @molbap in [#39339]

- [Fast image processors] Improve handling of image-like inputs other than images (segmentation_maps) by @yonigozlan in [#39489]

- [

CI] Fix post merge ernie 4.5 by @vasqu in [#39561] - Update modernbertdecoder docs by @orionw in [#39453]

- Update OLMoE model card by @nlhmnlhmnlhm in [#39344]

- [gemma3] fix bidirectional image mask by @zucchini-nlp in [#39396]

- Bump AMD container for 2.7.1 PyTorch by @ahadnagy in [#39458]

- Fixes needed for n-d parallelism and TP by @winglian in [#39562]

- [timm_wrapper] add support for gradient checkpointing by @Yozer in [#39287]

- Add AMD test expectations to DETR model by @ahadnagy in [#39539]

- [docs] update attention implementation and cache docs by @zucchini-nlp in [#39547]

- [docs] Create page on inference servers with transformers backend by @zucchini-nlp in [#39550]

- Add AMD expectations to Mistral3 tests by @ahadnagy in [#39481]

- Add AMD GPU expectations for LLaVA tests by @ahadnagy in [#39486]

- General weight initialization scheme by @Cyrilvallez in [#39579]

- [cache refactor] Move all the caching logic to a per-layer approach by @manueldeprada in [#39106]

- Update

docs/source/ko/_toctree.ymlby @jungnerd in [#39516] - updated mistral3 model card by @cassiasamp in [#39531]

- [Paged-Attention] Handle continuous batching for repetition penalty by @kashif in [#39457]

- Torchdec RuntimeError catch by @SunMarc in [#39580]

- Fix link in "Inference server backends" doc by @hmellor in [#39589]

- [WIP] Add OneformerFastImageProcessor by @Player256 in [#38343]

- 🎯 Trackio integration by @qgallouedec in [#38814]

- Mask2former & Maskformer Fast Image Processor by @SangbumChoi in [#35685]

- Fix DynamicCache and simplify Cache classes a bit by @Cyrilvallez in [#39590]

- Generic task-specific base classes by @Cyrilvallez in [#39584]

- [Trackio] Allow single-gpu training and monitor power by @qgallouedec in [#39595]

- Rename

supports_static_cachetocan_compile_fullgraphby @zucchini-nlp in [#39505] - FP-Quant support by @BlackSamorez in [#38696]

- fix moe routing_weights by @llbdyiu66 in [#39581]

- [idefics3] fix for vLLM by @zucchini-nlp in [#39470]

- enable triton backend on awq xpu by @jiqing-feng in [#39443]

- Allow

device_meshhave multiple dim by @S1ro1 in [#38949] - Fix typos and grammar issues in documentation and code by @cluster2600 in [#39598]

- Fix important models CI by @molbap in [#39576]

- Move openai import by @ebezzam in [#39613]

- Fix DAC integration tests and checkpoint conversion. by @ebezzam in [#39313]

- Feature/standardize opt model card by @JoestarGagan in [#39568]

- standardized YOLOS model card according to template in [#36979] by @EthanV431 in [#39528]

- [Docs] Translate audio_classification.md from English to Spanish by @weezymatt in [#39513]

- Update recent processors for vLLM backend by @zucchini-nlp in [#39583]

- [efficientloftr] fix model_id in tests by @sbucaille in [#39621]

- [timm] new timm pin by @gante in [#39640]

- [Voxtral] values for A10 runners by @eustlb in [#39605]

- revert behavior of _prepare_from_posids by @winglian in [#39622]

- Add owlv2 fast processor by @lmarshall12 in [#39041]

- [attention] fix test for packed padfree masking by @zucchini-nlp in [#39582]

- Fix: explicit not none check for tensors in flash attention by @jeffrey-dot-li in [#39639]

- revert change to cu_seqlen_k and max_k when preparing from position_ids by @winglian in [#39653]

- Make pytorch examples UV-compatible by @lhoestq in [#39635]

- [docs] fix ko cache docs by @gante in [#39644]

- make fixup by @gante in [#39661]

- fix(voxtral): correct typo in apply_transcription_request by @rev2607 in [#39572]

- Rename huggingface_cli to hf by @LysandreJik in [#39630]

- 🚨[Fast Image Processor] Force Fast Image Processor for Qwen2_VL/2_5_VL + Refactor by @yonigozlan in [#39591]

- Fix ModernBERT Decoder model by @qubvel in [#39671]

- [CI] revert device in

test_export_static_cacheby @gante in [#39662] - [

Ernie 4.5] Post merge adaptations by @vasqu in [#39664] - Delete bad rebasing functions by @Cyrilvallez in [#39672]

- Fixes the BC by @ArthurZucker in [#39636]

- fix

kyutaitests by @ydshieh in [#39416] - update expected outputs for whisper after [#38778] by @ydshieh in [#39304]

- Add missing flag for CacheLayer by @Cyrilvallez in [#39678]

- Fix auto_docstring crashing when dependencies are missing by @yonigozlan in [#39564]

- fix: HWIO to OIHW by @RyanMullins in [#39200]

- Use auto_docstring for perception_lm fast image processor by @yonigozlan in [#39679]

- bad_words_ids no longer slow on mps by @DWarez in [#39556]

- Support

typing.Literalas type of tool parameters or return value by @grf53 in [#39633] - fix break for ckpt without _tp_plan by @MoyanZitto in [#39658]

- Fix tied weight test by @Cyrilvallez in [#39680]

- Add padding-free to Granite hybrid moe models by @garrett361 in [#39677]

Significant community contributions

The following contributors have made significant changes to the library over the last release:

- @sbucaille

- Update SuperPoint model card (#38896)

- [superglue] fix wrong concatenation which made batching results wrong (#38850)

- [lightglue] add support for remote code DISK keypoint detector (#39253)

- docs: update SuperGlue docs (#39406)

- docs: update LightGlue docs (#39407)

- Add EfficientLoFTR model (#36355)

- @yaswanth19

- Cleanup Attention class for Siglip and dependent models (#39040)

- ✨ Add EoMT Model || 🚨 Fix Mask2Former loss calculation (#37610)

- 🚨🚨🚨 [eomt] make EoMT compatible with pipeline (#39122)

- Add Aimv2 model (#36625)

- [Tests] Update model_id in AIMv2 Tests (#39281)

- @bvantuan

- Fix initialization of OneFormer (#38901)

- Fix key mapping for VLMs (#39029)

- Fix missing initializations for models created in 2024 (#38987)

- Fix missing initializations for models created in 2023 (#39239)

- @NahieliV

- add fast image processor nougat (#37661)

- @MinJu-Ha

- Add Fast Image Processor for mobileViT (#37143)

- @zRzRzRzRzRzRzR

- Fix some bug for finetune and batch infer For GLM-4.1V (#39090)

- Glm 4 doc (#39247)

- GLM-4 Update (#39393)

- @simonreise

- Add

segmentation_mapssupport to MobileNetV2ImageProcessor (#37312)

- Add

- @LoserCheems

- Add Doge model (#35891)

- @VladOS95-cyber

- Add DeepSeek V2 Model into Transformers (#36400)

- @paulpak58

- LFM2 (#39340)

- [modeling][lfm2] LFM2: Remove deprecated seen_tokens (#39342)

- @shuminghu

- PerceptionLM (#37878)

- @juliendenize

- Add mistral common support (#38906)

- @orionw

- Add ModernBERT Decoder Models - ModernBERT, but trained with CLM! (#38967)

- Update modernbertdecoder docs (#39453)

- @cyyever

- Fix invalid property (#39384)

- Defaults to adamw_torch_fused for Pytorch>=2.8 (#37358)

- Remove runtime conditions for type checking (#37340)

- Use newer typing notation (#38934)

- Enable some ruff checks for performance and readability (#39383)

- Fix pylint warnings (#39477)

- @jungnerd

- Update

docs/source/ko/_toctree.yml(#39516)

- Update

- @Player256

- [WIP] Add OneformerFastImageProcessor (#38343)

- @SangbumChoi

- Mask2former & Maskformer Fast Image Processor (#35685)

- @BlackSamorez

- FP-Quant support (#38696)