#1259 Optimize Document::WordCharacterClass() and CategoriseCharacter()

Document::WordCharacterClass() and CategoriseCharacter() can be optimized by using splitbins() from https://github.com/python/cpython/blob/master/Tools/unicode/makeunicodedata.py

but split index twice instead of once, result in 3 lookup tables:

# creation

indexA, indexB, shiftA = splitbins(index)

indexC, indexD, shiftC = splitbins(indexB)

# lookup for charcacter ch

maskA = (1 << shiftA) - 1

maskC = (1 << shiftC) - 1

i = (indexA[ch >> shiftA] << shiftA) + (ch & maskA)

i = (indexC[i >> shiftC] << shiftC) + (i & maskC)

value = indexD[i]

I have made CharClassify::ClassifyCharacter() at

https://github.com/zufuliu/notepad2/blob/master/scintilla/scripts/GenerateCharacterCategory.py#L311

to replace the switch block in Document::WordCharacterClass() (9K bytes for Scintilla, 10K bytes for me, because I treat CJK word as a distinct class). Which is about 180 times fast than old switch block for looking all charterer in [128, 0x10ffff] (through not a typical usage).

For CategoriseCharacter() 22K bytes is required (currently 14K bytes and average 12 lookup).

By the way, I think call

IsXidContinue()andIsXidStart()in LexPython for code page other than UTF-8 is wild.The following pieces of code is valid on Windows system with code page 932 (GBK).

This was likely contributed by a project that only supports UTF-8. The controlling property is called lexer.python.unicode.identifiers so I wouldn't expect it to work with other DBCS code pages. There could be an additional check so this is only executed for UTF-8.

How about the splitbins proposal? If it can be considered, I can adopt GenerateCharacterCategory.py in Notepad2 to generate tables for

CategoriseCharacter()(or maybe standalone table forWordCharacterClass(), with which treating CJK word character as a distinct class can be implemented).I find that, by split t2 inside splitbins(), index table for CategoriseCharacter() can reduced to 17.7 KB.

attachments are the script, and changes in CharacterCategory.cxx.

parameter type for CategoriseCharacter() is changed to unsigned to avoid one comparison.

To consider speed, I'd prefer to run CategoriseCharacter on every character in an example document that represents the sort of thing Scintilla is used for (source code and markup, not novels) so perhaps an East Asian UTF-8 HTML document such as the contents of https://www.asahi.com/ . The new code should be compared against the current code and the current code with a lookup table for 0..0xff as that would be a much simpler change.

This code seems a little unclear as it appears that 2 indices were jammed into a single CharacterCategoryTable1 variable. The changed code is more complex than that which it is replacing so needs to be presented as clearly as possible. This needs to be maintainable and debuggable by people trying to understand problems.

There should be a better explanation of what splitbins does and what it depends on. It expects that its input is organised as a sequence of equal length (power-of-two) subsequences where subsequences may be similar to other subsequences.

Generated code should be limited to data tables where possible so CategoriseCharacter should be outside the generated block and depend on the table values and some declared constants. This minimizes the chance of behaviour changing unexpectedly when a script is run and allows maintainers to more easily modify the code.

The signature of CategoriseCharacter has changed to take an

unsigned intinstead of anint. StyleContext::ch and related variablkes areint. CategoriseCharacter is called withintarguments in current lexers so this is a mismatch and differs from other APIs like IsXidContinue. Whileunsigned intorchar32_tmay have benefits changing to them has wide-ranging implications so should be avoided here.Using

chfor the Unicode input as well as 2 different indices in CategoriseCharacter does not help understanding.Wide lines fail with many tools so the tables should be line-wrapped at either 1, 8, 10, or 16 elements.

Code that is not used, such as updateCharClassifyTable should not be included.

splitbins is copied from Python's source code so is licensed under Python's license and the conditions in that license need to be followed. That probably means including copyright notices and license conditions somewhere.

https://github.com/python/cpython/blob/master/LICENSE

Added test source code.

Adding a table for [0, 0xff] would make CategoriseCharacter faster for normal (code other than plain text) usage.

The binary search method has drawback for Greek, Cyrillic and other languages

https://en.wikipedia.org/wiki/Plane_(Unicode)#Basic_Multilingual_Plane

Update test, adding test for WordCharacterClass and copyright notices for splitbins.

The MSVC compilation should use

/O2instead of/Oxas/Oxis discouraged by Microsoft. Examples include MSDNand from STL (Stephan T. Lavavej, maintainer of the MSVC standard library)

Add

/GLas this allows link-time inlining and which is most likely to benefit short simple functions like "new method".Attached a script which gets the pages which may help others.

The result.txt shows speeds which appear quite slow - which compiler are these with and are the results from a laptop or virtual machine? A shorter format specifier like

%6.0fwould make the results more readable.splitbins could be in a separate .py file to more clearly delimit the code copied from Python.

Updated, the result of GCC seems unreliable.

The time unit is us. Run from a Win10 desktop with AMD Athlon II X4 640.

Updated runTestCat.bat.

The Python license is complex and appears to need the actual license agreement text to be included in derivative works, not a reference to the license:

The build command for Clang on both macOS and Linux is

On Linux (Ubuntu 18.10 64-bit in a VM), Clang 7 appears much faster than GCC 8.2, to the extent (maximum speed-up of 8:1) that I think something is wrong. VMs are sometimes weird but Clang behaves well. The middle column here is speed-up over 'old method'.

A possible explanation for the bad GCC Linux times could be that this GCC includes mitigations for the Spectre security vulnerability which can target indirect branches like calling the CategoriseCharacterSig function in TestWordClass. Making TestWordClass a template over the function may avoid this and would remove indirect function overhead from measurements including other platforms.

Spectre

Seems no difference on Windows (or the template is wrong?), at least Spectre mitigation is not enabled by default (requires extra flags and libs for MSVC).

I'm seeing some large speed ups from the template version. It seems to fix GCC on Linux although basic 'new method' is still quite slow -- maybe there is more indirection.

On MSVC 64-bit, templating seems to remove some testing overhead on my setup. The functions being tested are so short (often 3 comparisons and an array index) that replacing an indirect call with a direct call or inlining (since indirect calls can't be inlined) could make a large difference.

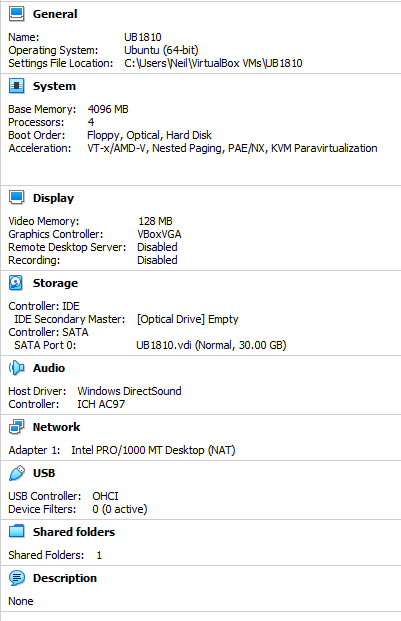

Result on Ubuntu 18.10 VirtualBox 6.0.4 (Win10 i5-4570 host).

with

-O2 -flto -fuse-linker-plugin -finline-functions:but still slow than Clang:

These results are really weird and differ greatly from my results, with an i7-6700 (Win10 host) through VirtualBox 5.2.26. I'm fairly slow at updating to new major VirtualBox versions as they can take a while to shake out bugs.

Your VM results are around 10 times slower than these and also slower than your Windows native results. Benchmarks show around a 30% advantage for the i7-6700 over the i5-4570 so the processor is nowhere near enough to produce this difference.

Your results show "new method" as around twice as fast as "latin + new" for Editor.cxx which also makes little sense to me. For Editor.cxx, every byte is ASCII so both the "latin +" cases should only run the first section of their code, indexing once into the Latin table which should always be faster than the 3 look ups and extra calculations of "new method". There is one comparison removed for "new method" over "latin + new", but that shouldn't be significant and should always be correctly predicted for Editor.cxx. About the only possibility that makes some sense is that "new method" is a preferred candidate for inlining over "latin + new".

It would also be an idea to include the bit-width of the build in the output. Something like

added bits.

Updated to VirtualBox 6.0.4 with no significant change to results. Here is my VirtualBox configuration. You seem to be missing PAE/NX although I wouldn't expect them to be a problem. Make sure you have installed the current version of the

Guest Additions.Host (desktop) and VM (with PAE/NX enabled) is update to date. output by vbox-greeter:

attached the detail compiler output (

./runTestCat.sh -vand./runTestCat.sh clang -v)Clang enabled

-vectorize-loops -vectorize-slpon O2, enable-ftree-vectorizefor GCC seems not affect speed on my VM and host.Update the test, changing

+to|.result on i5-4700 Win10 x64. Clang 9.0.0 SVN r351376 from https://llvm.org/builds/

With pre-shift for catTable2.

pre shift catTabel1 and catTable2.

result on MacBookPro (i5, Late 2011) using Clang 10 (Xcode 10.1).

correct web_cn.html

Update test, it's seems when non-ASCII characters goes to 8% and above, new method will outperform latin+old.