Double Compression Artifacts

Whenever an image is compressed multiple times with different quality factors using a lossy scheme such as JPEG, some artifacts may appear. If different parts of the image have different quality factors (as in tampered images), resaving the image with another quality factor may make those artifacts increase unevenly across the image. Some of the algorithms in this project, like JPEG Ghost and Error Level Analysis, help us visualize those artifacts, potentially revealing a tampered region.

First, a Tiny Bit About JPEG

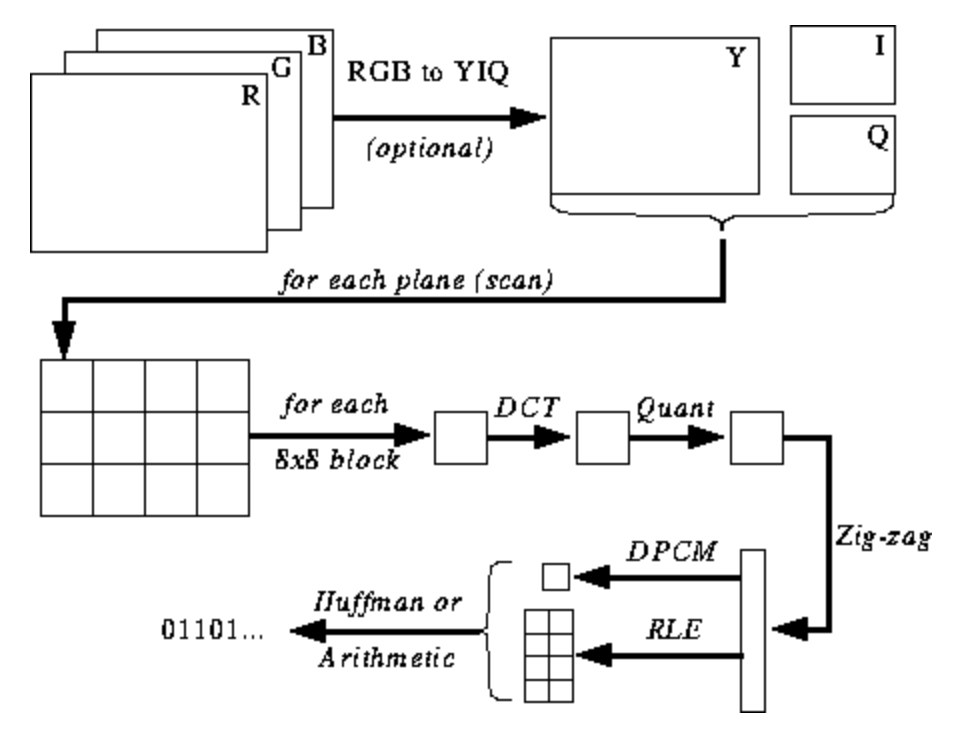

The JPEG pipeline works (very roughly speaking) in five steps:

- The image is converted from RGB to YCbCr;

- Each 8x8 block of pixels is converted to the frequency domain through the DCT (discrete cosine transform);

- Each 8x8 block in the frequency domain is quantized (each coefficient is divided by a predefined value and rounded);

- Each block is converted to an array (zig-zag ordering);

- A Huffman lossless compression is applied to the remaining data.

First, the image is converted from RGB to YCbCr (luminance, blue-difference chrominance and red-difference chrominance). This is done because we perceive brightness and color in different ways (our eyes are more sensitive to brightness than to color), so each channel can be compressed differently. This first step is not critical for understanding the artifact detection algorithms.
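
The conversion can be sketched per pixel. This is a minimal sketch assuming the full-range equations from the JFIF specification (other standards use slightly different coefficients); the function name `rgb_to_ycbcr` is hypothetical, and a real encoder would also round and clamp the results to 0..255.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to (Y, Cb, Cr) using the JFIF full-range equations.

    Results are returned unrounded; an encoder would round and clamp them to 0..255.
    """
    y = 0.299 * r + 0.587 * g + 0.114 * b              # luminance (brightness)
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b   # blue-difference chrominance
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b   # red-difference chrominance
    return y, cb, cr


# A grey pixel carries no colour information, so Cb and Cr stay (up to float error) at 128.
print(rgb_to_ycbcr(200, 200, 200))
# Pure red: fairly low luminance, Cr well above 128 and Cb below it.
print(rgb_to_ycbcr(255, 0, 0))
```

Keeping brightness in its own channel is what lets an encoder treat Y and the two chrominance channels differently later on.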

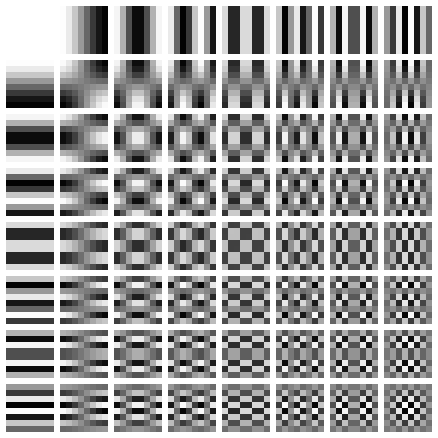

Second, the image is divided into blocks of 8x8 pixels. These blocks are then transformed to the frequency domain through the DCT (discrete cosine transform). This is done because we humans perceive very little of high-frequency changes in brightness and color, so high-frequency components can be discarded with very little effect on the overall image. More about the DCT can be found in the video below.
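
A direct (slow but readable) version of the 8x8 two-dimensional DCT-II used by baseline JPEG is sketched here. It assumes 8-bit samples, which JPEG level-shifts by subtracting 128 before the transform; `dct_8x8` is a hypothetical helper name, and real encoders use fast factorised versions of the same transform.

```python
import math

N = 8


def dct_8x8(block):
    """2-D DCT-II of an 8x8 block of 8-bit samples, with JPEG's -128 level shift."""
    def c(k):
        # Normalisation factor: 1/sqrt(2) for the zero-frequency term, 1 otherwise.
        return 1 / math.sqrt(2) if k == 0 else 1.0

    shifted = [[block[x][y] - 128 for y in range(N)] for x in range(N)]
    out = [[0.0] * N for _ in range(N)]
    for u in range(N):          # vertical frequency
        for v in range(N):      # horizontal frequency
            total = 0.0
            for x in range(N):
                for y in range(N):
                    total += (shifted[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / 16)
                              * math.cos((2 * y + 1) * v * math.pi / 16))
            out[u][v] = 0.25 * c(u) * c(v) * total
    return out


# A flat block has no variation, so all its energy lands in the DC coefficient out[0][0].
flat = [[136] * N for _ in range(N)]
print(round(dct_8x8(flat)[0][0], 6))  # 64.0
```

The top-left coefficient holds the average brightness of the block; coefficients further to the right and down describe progressively faster changes.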

This is what a DCT matrix looks like:

The third step is the most critical one for understanding how algorithms that detect compression artifacts work. Each coefficient of every 8x8 DCT block is divided by the corresponding value of a quantization table and rounded to the nearest integer, resulting in lots of zeros in the final block. The following picture summarizes the process. Note that the final quantized table has a lot of zeros in it.
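
The divide-and-round step, and the multiply-back a decoder performs, can be sketched as follows. This assumes the example luminance table from Annex K of the JPEG standard (the table IJG libjpeg produces at quality 50) and made-up coefficient values; the helper names are hypothetical, and Python's `round` sends exact halves to the nearest even integer, a detail where real encoders may differ.

```python
# Example luminance quantization table from Annex K of the JPEG standard.
LUMA_Q = [
    [16, 11, 10, 16, 24, 40, 51, 61],
    [12, 12, 14, 19, 26, 58, 60, 55],
    [14, 13, 16, 24, 40, 57, 69, 56],
    [14, 17, 22, 29, 51, 87, 80, 62],
    [18, 22, 37, 56, 68, 109, 103, 77],
    [24, 35, 55, 64, 81, 104, 113, 92],
    [49, 64, 78, 87, 103, 121, 120, 101],
    [72, 92, 95, 98, 112, 100, 103, 99],
]


def quantize(coeffs, table):
    """Divide each DCT coefficient by its table entry and round to the nearest integer."""
    return [[round(coeffs[u][v] / table[u][v]) for v in range(8)] for u in range(8)]


def dequantize(levels, table):
    """What the decoder does: multiply the stored integers back by the table entries."""
    return [[levels[u][v] * table[u][v] for v in range(8)] for u in range(8)]


coeffs = [[0.0] * 8 for _ in range(8)]
coeffs[0][0] = 64.0   # DC term
coeffs[0][1] = 30.0   # low horizontal frequency
coeffs[7][7] = 40.0   # highest frequency
levels = quantize(coeffs, LUMA_Q)
restored = dequantize(levels, LUMA_Q)
print(levels[0][0], levels[0][1], levels[7][7])        # 4 3 0
print(restored[0][0], restored[0][1], restored[7][7])  # 64 33 0
```

The high-frequency value 40 is divided by 99 and becomes 0, so it is lost for good, while the low-frequency 30 comes back as 33: the decoder only ever sees multiples of the table entries.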

This means that when we apply the inverse DCT to display the image later, the result will be an approximation of the original image. Most of the high-frequency values are rounded to zero; this is where the compression occurs. In JPEG, the lower the quality, the larger the quantization values for each frequency. These values are chosen somewhat arbitrarily, and each vendor can define its own quantization tables. A typical JPEG file stores two 8x8 quantization tables, whose values are fixed for the whole image: one for luminance and another for chrominance. Analyzing these tables can link a JPEG file directly to the editing software that produced it (like GIMP and CorelDRAW) or even to a specific camera (but this is a whole other story).
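
How quality maps to larger quantization values depends on the encoder. The sketch below assumes the IJG libjpeg convention (a quality of 1..100 turned into a percentage scale of a base table), which many tools follow but which is only one of the vendor-specific choices mentioned above; `scale_table` is a hypothetical name, and only the first row of the Annex K luminance table is used to keep it short.

```python
# First row of the Annex K luminance table from the JPEG standard, used as the base table.
BASE_ROW = [16, 11, 10, 16, 24, 40, 51, 61]


def scale_table(base, quality):
    """Scale a base quantization table for a 1..100 quality setting (IJG libjpeg convention).

    Entries are clamped to 1..255, as libjpeg does when forcing baseline-compatible tables.
    """
    quality = max(1, min(100, quality))
    scale = 5000 // quality if quality < 50 else 200 - 2 * quality
    return [[max(1, min(255, (q * scale + 50) // 100)) for q in row] for row in base]


for quality in (10, 50, 90, 100):
    print(quality, scale_table([BASE_ROW], quality)[0])
```

At quality 50 the base table is used unchanged, at quality 10 every entry grows about five-fold (clamped at 255), and at quality 100 every entry collapses to 1, so almost nothing is thrown away.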

In the fourth step, each quantized block is converted to an array of data, and in the fifth step this array is compressed with the Huffman lossless compression algorithm. All those zeros in the high-frequency components of the image ensure that the final file is smaller than the original.
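
The zig-zag ordering walks each block along its anti-diagonals so that low frequencies come first and the zeros pile up at the end of the array, which is what makes the final entropy-coding stage effective (real JPEG also run-length codes those zero runs before Huffman coding). This is a minimal sketch; the function names and the sample block are hypothetical.

```python
def zigzag_order(n=8):
    """Return the (row, col) positions of an n x n block in JPEG zig-zag order."""
    order = []
    for s in range(2 * n - 1):            # s = row + col identifies each anti-diagonal
        lo, hi = max(0, s - n + 1), min(s, n - 1)
        # Odd diagonals run top-right to bottom-left (row increasing), even ones the other way.
        rows = range(lo, hi + 1) if s % 2 == 1 else range(hi, lo - 1, -1)
        order.extend((i, s - i) for i in rows)
    return order


def zigzag(block):
    """Flatten a square block into a list, low frequencies first."""
    return [block[i][j] for i, j in zigzag_order(len(block))]


# A typical quantized block: a few non-zero low-frequency values, zeros everywhere else.
block = [[0] * 8 for _ in range(8)]
block[0][0], block[0][1], block[1][0], block[2][0] = 4, 3, -2, 1
flat = zigzag(block)
last_nonzero = max(k for k, value in enumerate(flat) if value != 0)
print(flat[:6], "trailing zeros:", len(flat) - last_nonzero - 1)
```

All four non-zero values end up at the front of the array, followed by one long run of zeros.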

Excellent material about how JPEG works can be found on Yasoob Khalid's blog. Some images on this wiki are referenced directly from Khalid's blog. I encourage you to check it out.

Artifacts

Let’s consider a DCT coefficient c1 quantized by some initial value q1. This coefficient is then quantized again by a value q2, resulting in the new quantized value c2, as in (1).
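
One way to write these quantities, assuming quantization is modelled as dividing by the step, rounding to the nearest integer and multiplying back (the values a decoder reconstructs), is shown here. The error measure d(q_2), summed over the coefficients i of the region being compared, is notation introduced for illustration:

```latex
c_2 = q_2 \,\mathrm{round}\!\left(\frac{c_1}{q_2}\right), \qquad
d(q_2) = \sum_i \bigl(c_{1,i} - c_{2,i}\bigr)^2
```

Because every c_1 is already a multiple of q_1, this gives d(1) = d(q_1) = 0.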

The minimum difference between c1 and c2 occurs when q2 = 1 (the trivial case) or q1 = q2, as in (2). As the difference between q1 and q2 increases, the difference between c1 and c2 also tends to increase, as in (3).
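
This single-requantization behaviour can be checked numerically. The sketch below assumes synthetic, evenly spread coefficients saved once with q1 = 17 (an arbitrary prime, so only q2 = 1 and q2 = 17 divide it); `requantize` and `difference` are hypothetical helpers that model quantization as divide, round and multiply back.

```python
def requantize(values, q):
    """Quantize with step q and multiply back, i.e. what a decoder reconstructs."""
    return [q * round(v / q) for v in values]


def difference(c1, q2):
    """Sum of squared differences between c1 and c2 = requantize(c1, q2)."""
    c2 = requantize(c1, q2)
    return sum((a - b) ** 2 for a, b in zip(c1, c2))


# Synthetic coefficients (assumption: evenly spread integers), saved once with q1 = 17.
c1 = requantize(range(-200, 201), 17)
for q2 in (1, 10, 15, 17, 19, 25):
    print(q2, difference(c1, q2))
```

The difference is exactly zero at q2 = 1 and q2 = 17 and positive for every other step, with larger values generally appearing as q2 moves away from q1.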

So far so good!

This is just the normal behavior of JPEG compression. But let’s consider that, before c1, there was a value c0 quantized by q0, which, once quantized by q1, leads to c1, as in (4). Now we expect at least three values of q2 that lead to a minimum difference between c1 and c2: q2 = 1 (the trivial case), q2 = q1 (the last compression has the same quality as the previous one) and finally q2 = q0, which shows up as a second minimum when q1 < q0. This means that, given only c1, it is possible to find at least one value of q2 that reveals the earlier quantization q0.
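
The second minimum can be reproduced with the same kind of simulation. This sketch assumes synthetic coefficients first quantized with a coarse q0 = 23 and then with a finer q1 = 7 (arbitrary values satisfying q1 < q0); the helpers are the same hypothetical `requantize` and `difference` functions, repeated so the block runs on its own.

```python
def requantize(values, q):
    """Quantize with step q and multiply back, i.e. what a decoder reconstructs."""
    return [q * round(v / q) for v in values]


def difference(c1, q2):
    """Sum of squared differences between c1 and c2 = requantize(c1, q2)."""
    c2 = requantize(c1, q2)
    return sum((a - b) ** 2 for a, b in zip(c1, c2))


# Synthetic coefficients first saved with a coarse step q0, then resaved with a finer q1.
q0, q1 = 23, 7
c1 = requantize(requantize(range(-500, 501), q0), q1)
curve = {q2: difference(c1, q2) for q2 in range(1, 31)}
for q2 in (1, 7, 22, 23, 24):
    print(q2, curve[q2])
```

Besides the zeros at q2 = 1 and q2 = q1, the curve dips again at q2 = q0 = 23 compared with its neighbours 22 and 24: the coefficients still sit close to multiples of 23, a trace of the first compression.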

Hany Farid calls this second minimum a JPEG ghost, and it is one of the main artifacts that arise from double compression.

OK, but the question remains… how do JPEG ghosts help us find tampered images?

A region inserted into a montage or forged image will likely not share the same initial quantization value q0 (let’s say it was quantized with q3 instead). The minimum error for that region (quantized with q3) will therefore not occur at the same q2 as for the rest of the image, which was initially quantized with q0.

This means that algorithms such as Error Level Analysis and JPEG Ghost can resave the image c1 at several quality levels (and therefore several values of q2), look for the levels at which the error between the two images is minimal, and highlight the areas where this minimum differs from the rest of the image, revealing possible tampering.
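
The JPEG Ghost idea can be simulated at the coefficient level. This is a minimal sketch, not this project's implementation: it works on synthetic coefficient lists rather than pixels, uses hypothetical region names `background` and `pasted`, and gives q0, q1 and q3 the arbitrary values 23, 7 and 13. A real JPEG Ghost resaves the decoded image at a range of JPEG qualities and averages the squared pixel differences over small windows.

```python
def requantize(values, q):
    """Quantize with step q and multiply back, i.e. what a decoder reconstructs."""
    return [q * round(v / q) for v in values]


def ghost_curve(c1, candidates):
    """Mean squared difference between c1 and c1 requantized by each candidate q2."""
    curve = {}
    for q2 in candidates:
        c2 = requantize(c1, q2)
        curve[q2] = sum((a - b) ** 2 for a, b in zip(c1, c2)) / len(c1)
    return curve


raw = range(-500, 501)                            # synthetic never-compressed coefficients
background = requantize(requantize(raw, 23), 7)   # first saved with q0 = 23, resaved with q1 = 7
pasted = requantize(requantize(raw, 13), 7)       # pasted region first saved with q3 = 13

bg_curve = ghost_curve(background, range(2, 31))
pasted_curve = ghost_curve(pasted, range(2, 31))

# At q2 = q0 the background error dips while the pasted region's does not; at q2 = q3 it is reversed.
for q2 in (13, 23):
    print(q2, round(bg_curve[q2], 1), round(pasted_curve[q2], 1))
```

Mapping these per-region errors back onto the image for each candidate q2 is what makes the pasted region stand out as a "ghost" at some quality levels and not at others.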