Building a mini server for running Elk

In this HowTo I'll describe how to set up a mini server dedicated to running Elk. For researchers who do not have access to a high-performance computing cluster, this may be a cost-effective way of performing computationally demanding calculations with Elk. Such a machine is also ideal for rapid code development, and in our group we devote an entire mini server to each of our students and post-docs for this purpose.

This should be attempted only by people who are experienced with using Linux. We accept no responsibility for any losses incurred by following this HowTo -- you do so entirely at your own risk.

Thanks to Tristan Müller and Peter Elliot for helping out with this.

Hardware

For this example, I've obtained two AMD Threadripper 2990WX desktop machines with 64 GB RAM and 32 cores each. Both computers have a fast Samsung 970 EVO Plus SSD capable of 3500 MB/s read speed. Elk reads from and writes to disk heavily, so a fast drive is beneficial.

I also bought two second-hand Mellanox ConnectX-3 Infiniband cards and a QSFP+ Infiniband cable. These have a high bandwidth of about 40 Gb/s and a low latency. Used Infiniband cards give very good inter-node communication speeds at low cost. These cards come with either one or two QSFP+ ports -- both types are suitable for this build.

Infiniband also allows computers to be connected directly. Thus three machines can be connected without a switch if at least one of them has a two-port Infiniband card and assumes the role of the subnet manager. Connecting more machines requires an Infiniband switch. Fortunately, used Infiniband switches (such as the 8-port Mellanox IS5022) can be obtained at a very reasonable cost. A drawback of using a switch is that its cooling fans can be too loud for an office, because switches are intended for use in a server room. However, the fans can be removed and replaced with quieter versions. It may also be possible to 'daisy-chain' more than three computers without a switch, but we have no experience in doing so.

Mellanox ConnectX-3 cards with two ports each, QSFP+ cables and an Infiniband switch used for this build. Note that a switch is only required for more than three computers; two or three computers can be directly connected with the cables.

(Be aware that FlexLOM Infiniband cards are not compatible with regular PCIe slots.)

Label one computer as elk-001 and the other as elk-002. Plug the Infiniband cards into the PCIe slots and connect the two computers together with the QSFP+ cable.

If you have more than three computers, connect them together via an Infiniband switch.

Setup for two computers, elk-001 and elk-002, using the Infiniband switch.

Disable hyperthreading in the BIOS settings of each computer. You may also be able to boost the DDR4 RAM clock above the default 2400 MHz.

Connect both machines to the internet via their Ethernet ports.

Last edit: J. K. Dewhurst 2021-04-04

Installing the operating system

In this case I'll use Xubuntu 20.04 as the operating system. Perform a default installation on both machines and name them elk-001 and elk-002.

On both machines run

Make a directory for downloading packages:

mkdir packages

Disabling the Spectre and Meltdown patches

As these machines are to be used only for calculations, you can get a small speed boost by disabling the kernel mitigations for the Spectre and Meltdown vulnerabilities.

In the file /etc/default/grub

Set:

GRUB_CMDLINE_LINUX="mitigations=off"

Run:

sudo grub-mkconfig -o /boot/grub/grub.cfg

Do this on both machines and reboot.
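If several nodes need the same change, the edit to /etc/default/grub can also be scripted. This is a minimal sketch rather than part of the original procedure: it assumes the file already contains a GRUB_CMDLINE_LINUX= line (a default Ubuntu install ships one with an empty value) and GNU sed for in-place editing with -i. The helper names set_grub_cmdline and cmdline_has are hypothetical.

```shell
# Hypothetical helpers for the GRUB step (not part of the original HowTo).

# set_grub_cmdline FILE PARAMS
# Replace the GRUB_CMDLINE_LINUX= line in FILE with one holding PARAMS.
# The pattern needs '=' right after "LINUX", so the separate
# GRUB_CMDLINE_LINUX_DEFAULT= line is not touched.
# PARAMS must not contain '|' or '&', which are special in this sed expression.
set_grub_cmdline() {
  sed -i "s|^GRUB_CMDLINE_LINUX=.*|GRUB_CMDLINE_LINUX=\"$2\"|" "$1"
}

# cmdline_has PARAM [CMDLINE]
# Succeed if PARAM occurs as a whole word in CMDLINE; by default CMDLINE is
# the command line of the running kernel, read from /proc/cmdline.
cmdline_has() {
  local cmdline="${2:-$(cat /proc/cmdline)}"
  case " $cmdline " in
    *" $1 "*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, from a root shell run `set_grub_cmdline /etc/default/grub "mitigations=off"` followed by the grub-mkconfig command above; after the reboot, `cmdline_has mitigations=off && echo "mitigations are off"` performs the same check as reading /proc/cmdline by eye.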

Check the command line with:

cat /proc/cmdline

Configuring Infiniband

Go to the Mellanox website and find the Mellanox OpenFabrics Enterprise Distribution for Linux (MLNX_OFED). If you are using ConnectX-3 cards, you will probably need the long-term support (LTS) version. Download the tarball (in this case MLNX_OFED_LINUX-4.9-2.2.4.0-ubuntu20.04-x86_64.tgz) to the packages directory.

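Now unpack the tarball:

    cd packages
    tar -xzf MLNX_OFED_LINUX-4.9-2.2.4.0-ubuntu20.04-x86_64.tgz

and run

    cd MLNX_OFED_LINUX-4.9-2.2.4.0-ubuntu20.04-x86_64
    sudo ./mlnxofedinstall --all
    sudo /etc/init.d/openibd restart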

This should be done on all machines (elk-001 and elk-002 in this case).

Note that we encountered a non-functioning Infiniband card which required a manual firmware update. See here for how to do this: https://forums.servethehome.com/index.php?threads/mellanox-connectx-3-vpi-mcx354a-fcbt-hp-oem-but-with-mellanox-oem-firmware-40-usd-each.23947/

Check that Infiniband is up and running

Type

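sudo ibstat

You should get something like:

    CA 'mlx4_0'
        CA type: MT4099
        Number of ports: 2
        Firmware version: 2.35.5100
        Hardware version: 1
        Node GUID: 0xf4521403007d79c0
        System image GUID: 0xf4521403007d79c3
        Port 1:
            State: Active
            Physical state: LinkUp
            Rate: 40
            Base lid: 1
            LMC: 0
            SM lid: 2
            Capability mask: 0x0251486a
            Port GUID: 0xf4521403007d79c1
            Link layer: InfiniBand
        Port 2:
            State: Down
            Physical state: Polling
            Rate: 10
            Base lid: 0
            LMC: 0
            SM lid: 0
            Capability mask: 0x02514868
            Port GUID: 0xf4521403007d79c2
            Link layer: InfiniBand

for a two-port ConnectX-3 card. The command 'ibstatus' will give similar information.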

Make sure both elk-001 and elk-002 have functioning Infiniband cards before continuing. The State should be 'Active' and the Physical state should be 'LinkUp' on both machines, which means they are correctly communicating with each other.
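With more nodes it is convenient to do this check from a script. A minimal sketch, assuming the ibstat output layout shown above (a 'State:' line followed by a 'Physical state:' line for each port); the function name ib_link_up is hypothetical:

```shell
# ib_link_up (hypothetical helper)
# Read `ibstat` output on standard input and succeed only if at least one
# port reports "State: Active" together with "Physical state: LinkUp".
ib_link_up() {
  awk '
    /^[ \t]*Port [0-9]+:/       { state = "" }     # start of a new port block
    /^[ \t]*State:/             { state = $2 }     # e.g. Active, Down
    /^[ \t]*Physical state:/    {
      if (state == "Active" && $3 == "LinkUp") ok = 1
    }
    END { exit (ok ? 0 : 1) }
  '
}
```

For example, `sudo ibstat | ib_link_up && echo "link up" || echo "link down"` on each machine. Only one active port is required, since a two-port card is normally cabled on a single port, as in the output above.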

There is a problem with systemd starting the Infiniband subnet manager, opensmd, without a writable root filesystem.

(Fix thanks to https://gist.github.com/oakwhiz/742f7fdf84700496f054)

On elk-001 in the file /etc/init.d/opensmd change

    # Default-Start: null

to

    # Default-Start: 2 3 4 5

Run:

sudo update-rc.d opensmd defaults

and reboot.

Check that the opensm daemon is loaded:

service --status-all

(The subnet manager should run on elk-001 only.)

IP over Infiniband (IPoIB)

We have to enable IP communication over Infiniband.

On both machines run:

sudo apt install net-tools

Run

ifconfig

to find the name of the Infiniband network interface (ibp9s0 in our case).

Run

sudo ifconfig ibp9s0 10.0.0.1/24

on elk-001 and

sudo ifconfig ibp9s0 10.0.0.2/24

on elk-002. Try

ssh 10.0.0.2

from elk-001 and

ssh 10.0.0.1

from elk-002.

Make the IP network interface permanent

First disable netplan with

On elk-001 create the file /etc/network/interfaces and add (change ibp9s0 to your interface):

Create the same file on elk-002, but with the address 10.0.0.2.
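The file contents are not reproduced in this post. As a hedged sketch only: in the classic ifupdown format (on Xubuntu 20.04 this assumes the ifupdown package is installed, since netplan is the default there), a static IPoIB address could be declared with a stanza like the one printed by the hypothetical helper ipoib_stanza below. The netmask corresponds to the /24 used in the ifconfig commands above.

```shell
# ipoib_stanza IFACE ADDRESS (hypothetical helper)
# Print an ifupdown stanza that brings IFACE up at boot with a static
# address on the 10.0.0.0/24 IPoIB network used in this HowTo.
ipoib_stanza() {
  printf 'auto %s\n' "$1"
  printf 'iface %s inet static\n' "$1"
  printf '    address %s\n' "$2"
  printf '    netmask 255.255.255.0\n'
}
```

For example, `ipoib_stanza ibp9s0 10.0.0.1 | sudo tee -a /etc/network/interfaces` on elk-001, and the same with 10.0.0.2 on elk-002.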

Reboot both machines. Note that the server (elk-001) should be booted first.

Exchanging public keys

On elk-001 run:

Append the contents of the file ~/.ssh/id_rsa.pub on elk-001 to the file ~/.ssh/authorized_keys on elk-002.

Do the same on elk-002: append the contents of the file ~/.ssh/id_rsa.pub on elk-002 to the file ~/.ssh/authorized_keys on elk-001.

You should now be able to ssh into elk-002 without a password and vice versa. This will allow OpenMPI and the network filesystem BeeGFS to access both machines without a password.
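OpenSSH's ssh-copy-id performs this append over ssh (for example `ssh-copy-id elk-002` run on elk-001, after creating a key pair with `ssh-keygen -t rsa`). If you prefer to append the key by hand, the sketch below avoids adding the same key twice when the step is repeated. append_key_once is a hypothetical helper; it also restricts authorized_keys to its owner (mode 600), as is commonly recommended.

```shell
# append_key_once PUBKEY_FILE AUTHORIZED_KEYS_FILE (hypothetical helper)
# Append the public key to AUTHORIZED_KEYS_FILE unless an identical line is
# already present, creating the file if needed and making it owner-only.
append_key_once() {
  local key
  key=$(cat "$1")
  touch "$2"
  chmod 600 "$2"
  # -x: match whole lines, -F: treat the key as a fixed string, not a regex
  grep -qxF "$key" "$2" || printf '%s\n' "$key" >> "$2"
}
```

For example, on elk-002, after copying elk-001's key to a temporary file with `scp elk-001:.ssh/id_rsa.pub /tmp/elk-001.pub`, run `append_key_once /tmp/elk-001.pub ~/.ssh/authorized_keys`.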

Install BeeGFS

A parallel file system is a critical part of a compute cluster. We have found that BeeGFS is fast, stable and works flawlessly with Elk.

Go to the website https://www.beegfs.io/ and download and install the following Debian package files on elk-001 (server and client):

On elk-002 (client) install:

On both machines in /etc/beegfs/beegfs-client-autobuild.conf

buildArgs=-j8

should be changed to:

buildArgs=-j8 BEEGFS_OPENTK_IBVERBS=1 OFED_INCLUDE_PATH=/usr/src/linux-headers-5.4.0-66-generic/include/

(You will have to change OFED_INCLUDE_PATH to match the kernel running on your system. Note that updating your Linux distribution may change the kernel, in which case this line must be changed too; otherwise the client won't work.)
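Since the path depends on the running kernel, it can be derived from `uname -r` instead of being typed by hand. A sketch, assuming the linux-headers layout used above; beegfs_build_args is a hypothetical helper:

```shell
# beegfs_build_args [KERNEL_RELEASE] (hypothetical helper)
# Print the buildArgs line for /etc/beegfs/beegfs-client-autobuild.conf,
# pointing OFED_INCLUDE_PATH at the headers of the given kernel release
# (default: the running kernel, as reported by `uname -r`).
beegfs_build_args() {
  local release="${1:-$(uname -r)}"
  printf 'buildArgs=-j8 BEEGFS_OPENTK_IBVERBS=1 OFED_INCLUDE_PATH=/usr/src/linux-headers-%s/include/\n' "$release"
}
```

After a kernel update the line could then be refreshed with `sudo sed -i "s|^buildArgs=.*|$(beegfs_build_args)|" /etc/beegfs/beegfs-client-autobuild.conf` before rebuilding the client.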

Then run:

sudo /etc/init.d/beegfs-client rebuild

In the client configuration file /etc/beegfs/beegfs-client.conf set

tuneRemoteFSync=false

and run

sudo systemctl restart beegfs-client

on elk-001 and elk-002.

Install BeeGFS (continued)

We will use elk-001 for management, metadata, storage and as a client. On elk-001 run:

In this case, elk-002 will be used exclusively as a client. On elk-002 run:

sudo /opt/beegfs/sbin/beegfs-setup-client -m 10.0.0.1

To bring up the services on the server (elk-001), use:

On both clients (elk-001 and elk-002) use:

Remove mlocate from both machines:

sudo apt purge mlocate

Filesystem speed test

We can test the file read and write speed across Infiniband on elk-002.

Local file system:

dd if=/dev/zero of=/tmp/test.img bs=1G count=1; rm /tmp/test.img

BeeGFS:

dd if=/dev/zero of=/mnt/beegfs/test.img bs=1G count=1; rm /mnt/beegfs/test.img

Note that BeeGFS can mirror files on other nodes. This way all the machines in your cluster can store files redundantly and increase throughput. To set this up, see the 'Mirror Buddy Groups' topic in the BeeGFS documentation.
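One caveat on the dd figures above: dd may report the write as complete while much of the data is still in the page cache, which can overstate the speed. GNU dd's conv=fdatasync option flushes the file before the rate is reported. A sketch using it, with the hypothetical helper write_speed; it writes 1024 blocks of 1 MiB (the same 1 GiB total as above) to avoid allocating a 1 GiB buffer:

```shell
# write_speed DIR [SIZE_MIB] (hypothetical helper)
# Write SIZE_MIB MiB of zeros (default 1024, i.e. 1 GiB) to a scratch file
# in DIR, print dd's summary line, then delete the scratch file.
# conv=fdatasync (GNU dd) flushes the data before dd reports the rate.
write_speed() {
  local f="$1/ddtest.$$"
  dd if=/dev/zero of="$f" bs=1M count="${2:-1024}" conv=fdatasync 2>&1 | tail -n 1
  rm -f "$f"
}
```

On elk-002, compare `write_speed /tmp` with `write_speed /mnt/beegfs`.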

Installing packages

On both computers run

sudo apt install gfortran intel-mkl libblis-dev openmpi-bin

Download Libxc version 5.x to elk-001 and unpack it. In the Libxc directory run

Download the latest version of Wannier90 to elk-001. In the Wannier90 directory run

Installing Elk

Download and unpack the latest version of elk to /mnt/beegfs/ on elk-001.

Copy the following static library and include files to the /mnt/beegfs/elk/src/ directory:

(found in the /libxc-5.x/src/.libs/ directory)

libwannier.a (found in the wannier90-3.x/ directory)

mkl_dfti.f90 (found in the /usr/include/mkl/ directory)

Installing Elk (continued)

Create the following make.inc file in the /mnt/beegfs/elk/ directory:

In the elk directory run:

To enable syntax highlighting in vim, run:

make vim

To test whether the code has been compiled correctly, run:

make test-all

Elk MPI run script

Create the file /mnt/beegfs/hosts with the entries

Create the script file /mnt/beegfs/elk_mpi with the following content:

and type

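The entries of the hosts file and the contents of elk_mpi are not reproduced here. As a hypothetical sketch only: an Open MPI hostfile lists one host per line with an optional slots= count. For one MPI process per node, with each process using all of that node's cores through OpenMP, the file could be generated with the hypothetical helper make_hostfile below, using the IPoIB addresses from above:

```shell
# make_hostfile HOST... (hypothetical helper)
# Print an Open MPI hostfile with one slot per host, i.e. one MPI process
# per node; OpenMP threads then spread each process over the node's cores.
make_hostfile() {
  for host in "$@"; do
    printf '%s slots=1\n' "$host"
  done
}
```

For example, `make_hostfile 10.0.0.1 10.0.0.2 > /mnt/beegfs/hosts`, after which a launch could look like `mpirun -np 2 --hostfile /mnt/beegfs/hosts -x OMP_NUM_THREADS=32 /mnt/beegfs/elk/src/elk`. The location of the Elk executable is an assumption; adjust it to your build.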

Bring down the slower Ethernet connection on elk-002 (and on any other client nodes); in our case this is enp3s0:

sudo ifconfig enp3s0 down

We find that this forces OpenMPI to use the faster IPoIB connection during setup. Alternatively, you can unplug the Ethernet cables from all nodes except elk-001.
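An alternative, not the method used in this HowTo: Open MPI can be told which interface to use through its MCA parameters btl_tcp_if_include (TCP data traffic) and oob_tcp_if_include (the out-of-band channel used at start-up), either with --mca on the mpirun command line or in the per-user file $HOME/.openmpi/mca-params.conf. A sketch that writes that file, assuming the interface name ibp9s0 from above; write_mca_params is a hypothetical helper:

```shell
# write_mca_params DIR IFACE (hypothetical helper)
# Write an Open MPI MCA parameter file in DIR that restricts both the TCP
# data channel and the out-of-band start-up channel to IFACE.
write_mca_params() {
  mkdir -p "$1"
  printf 'btl_tcp_if_include = %s\noob_tcp_if_include = %s\n' "$2" "$2" > "$1/mca-params.conf"
}
```

Run `write_mca_params "$HOME/.openmpi" ibp9s0` on every node, since the home directories here are local to each machine rather than on BeeGFS.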

Running Elk

If you've made it this far with no problems, then you should now have a functioning multi-node cluster which will run Elk very efficiently.

Create a working directory:

and make a subdirectory in work/ for your input files.

To run Elk, go to the subdirectory and type

The 'nohup' command will keep the job running even if you log out.
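The exact command is not reproduced here. As a generic sketch, a nohup launch that also redirects all output to a log file and prints the job's process ID (so it can be found later with ps or stopped with kill) might look like this; run_detached is a hypothetical helper:

```shell
# run_detached LOGFILE COMMAND [ARGS...] (hypothetical helper)
# Start COMMAND in the background, immune to the hang-up signal sent at
# logout, with stdout and stderr in LOGFILE; print the background PID.
run_detached() {
  local log="$1"
  shift
  nohup "$@" > "$log" 2>&1 < /dev/null &
  echo "$!"
}
```

For example, `run_detached elk.log /mnt/beegfs/elk_mpi` from the input subdirectory, assuming the run script created earlier is executable.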

Run

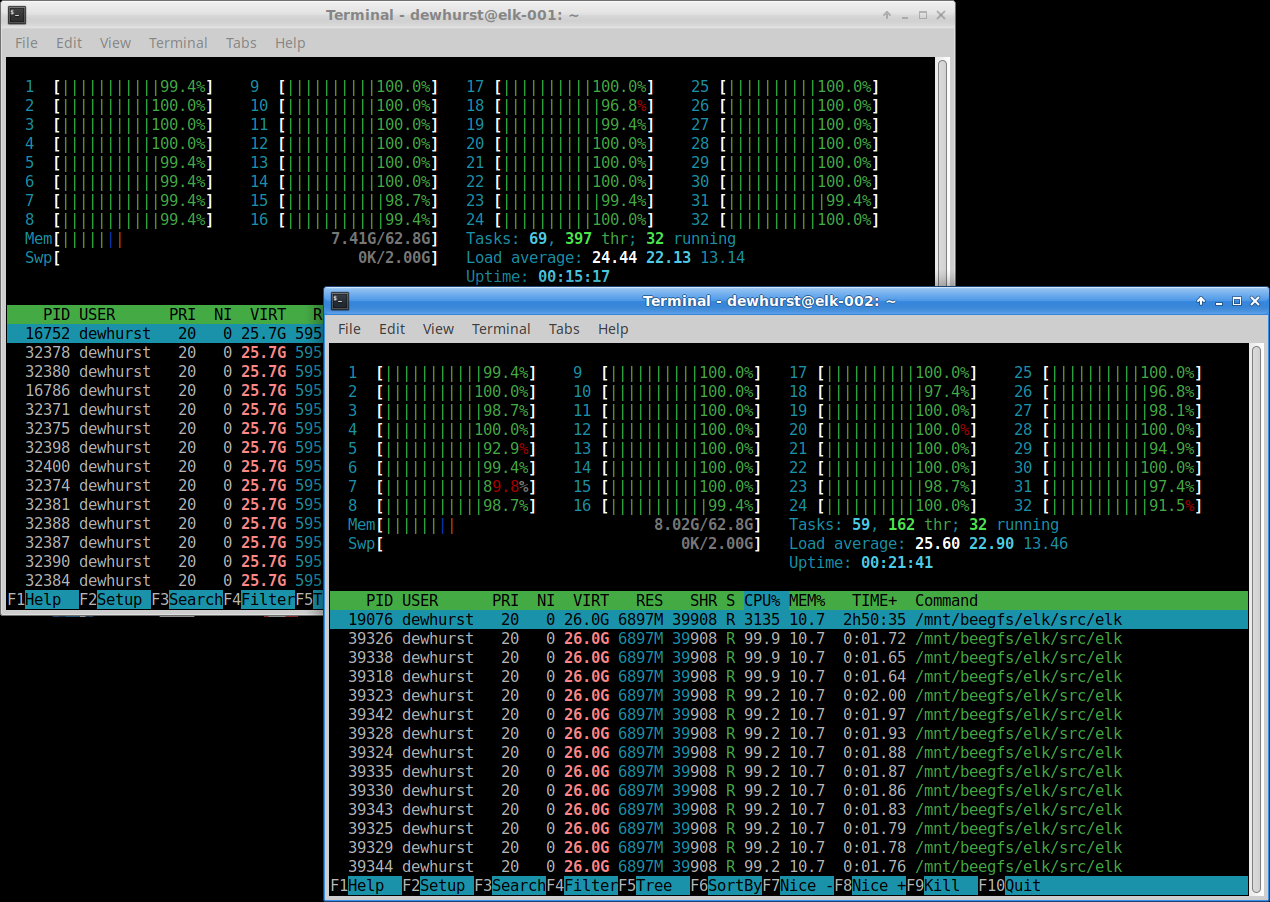

on both machines and you should see something like this:

...indicating that both machines are using all cores at close to 100%.


Final notes

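The instructions above apply to hardware and software from around 2020. Newer processors (such as the 64-core Threadripper 3990X) are already available, and used ConnectX-4 Infiniband cards are approaching more reasonable prices, so the build described above will have to be modified accordingly. However, the basic procedure remains the same:

1. Connect the computers together with a fast Infiniband network.
2. Install Linux, set up and test the Mellanox Infiniband drivers, and enable IPoIB.
3. Install a parallel file system, such as BeeGFS, with files stored on one node or mirrored/striped across all nodes.
4. Install a Fortran compiler (gfortran or Intel oneAPI), MKL and BLIS.
5. Download and compile Libxc and Wannier90.
6. Download and compile the latest version of Elk with MPI enabled.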

I recommend backing up the /mnt/beegfs/work directory from time to time. This mini server is built from consumer-grade parts, which may fail more often than their server-grade equivalents. Elk writes heavily to disk and SSDs are rated for a finite number of write cycles, so backing up is important.

We find it sufficient to back up only the input and INFO.OUT files; the other output files can be regenerated from these simply by running Elk again. To do so, type:

find /mnt/beegfs/work/ -name "*.in" -o -name "INFO.OUT" | tar -czf work.tgz -T -

...and store work.tgz on a different machine.
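A variant of the same backup, sketched under the assumption of GNU find and GNU tar: it restricts the match to regular files (which needs the parentheses, because the implied "and" binds more tightly than -o) and passes the names NUL-terminated so that unusual file names cannot be split. backup_inputs is a hypothetical helper:

```shell
# backup_inputs WORK_DIR ARCHIVE (hypothetical helper)
# Archive every *.in and INFO.OUT file under WORK_DIR into ARCHIVE (gzip).
# The parentheses make -type f apply to both -name alternatives;
# -print0 and --null pass the names NUL-terminated (GNU find and GNU tar).
backup_inputs() {
  find "$1" -type f \( -name '*.in' -o -name 'INFO.OUT' \) -print0 |
    tar --null -czf "$2" -T -
}
```

For example, `backup_inputs /mnt/beegfs/work "work-$(date +%F).tgz"` produces a dated archive that can then be copied to another machine.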

Lastly, be aware that we are constantly improving the efficiency of Elk. One way of doing so is reducing the memory footprint of the code, so that more of the work is done in the CPU cache rather than in main memory. Future versions of Elk will use more single-precision floating-point arithmetic, which requires less memory and also allows more operations per SIMD instruction. Thus you can improve your run times simply by updating to the latest version of Elk.

Dear Kay,

Thanks for your guide to building a multi-node parallel server for Elk.

I built a small server based on two nodes and ran a BSE calculation for GaAs.

With 12x12x12 k-points I got the following error at the end of hmldbse (see below).

With 9x9x9 k-points the job works fine.

My environment:

Elk 7.1.14

CentOS 7.8, Dell server, CPU: 8 cores, 32 threads, RAM: 64 GB, data exchange: 1 Gb/s

openmpi-1.4.5,

run script:

mpirun -np 2 -x OMP_NUM_THREADS=32 -x OMP_PROC_BIND=false --hostfile hostfile

elk.in attached.

Any help will be appreciated.

Youzhao Lan

China

----ERROR----

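~~~
Info(hmldbse): 833 of 1728 k-points

Program received signal SIGSEGV: Segmentation fault - invalid memory reference.

Backtrace for this error:
#0 0x7f49a612d27f in ???
#1 0x799fdd in ???
#2 0x78af8a in ???
#3 0x76f760 in ???
#4 0x53390c in ???
#5 0x46aa9c in ???
#6 0x417d39 in ???
#7 0x4035ec in ???
#8 0x7f49a61193d4 in ???
#9 0x40363b in ???
#10 0xffffffffffffffff in ???
--------------------------------------------------------------------------
mpirun noticed that process rank 0 with PID 2857 on node lyzs5 exited on signal 11 (Segmentation fault).
--------------------------------------------------------------------------
~~~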

Please also note:

If I run the 12x12x12 k-point job on a single node, task 185 (write hmlbse) completes normally and HMLBSE.OUT is written to disk.

Lan

Dear Lan,

I suspect you may be running out of memory. Try reducing the number of OpenMP threads at the first nesting level by a factor of 4 with:

and see if that helps.

Regards,

Kay.

Dear Kay,

I tried the following settings:

1. maxthd1 set to -2
2. maxthd1 set to -4
3. maxthd1 set to -2, together with -x OMP_STACKSIZE=512M

I got the same error. I noticed that less than 20% of the RAM is used during the calculation, and that the error occurs at the end of hmldbse.

Any other suggestions?

Best regards,

Lan

Dear Lan,

Perhaps it's the regular stack space being exhausted. Could you try:

~~~

ulimit -Ss unlimited

~~~

In the meantime, I'll try running BSE on my mini server.

Regards,

Kay.