
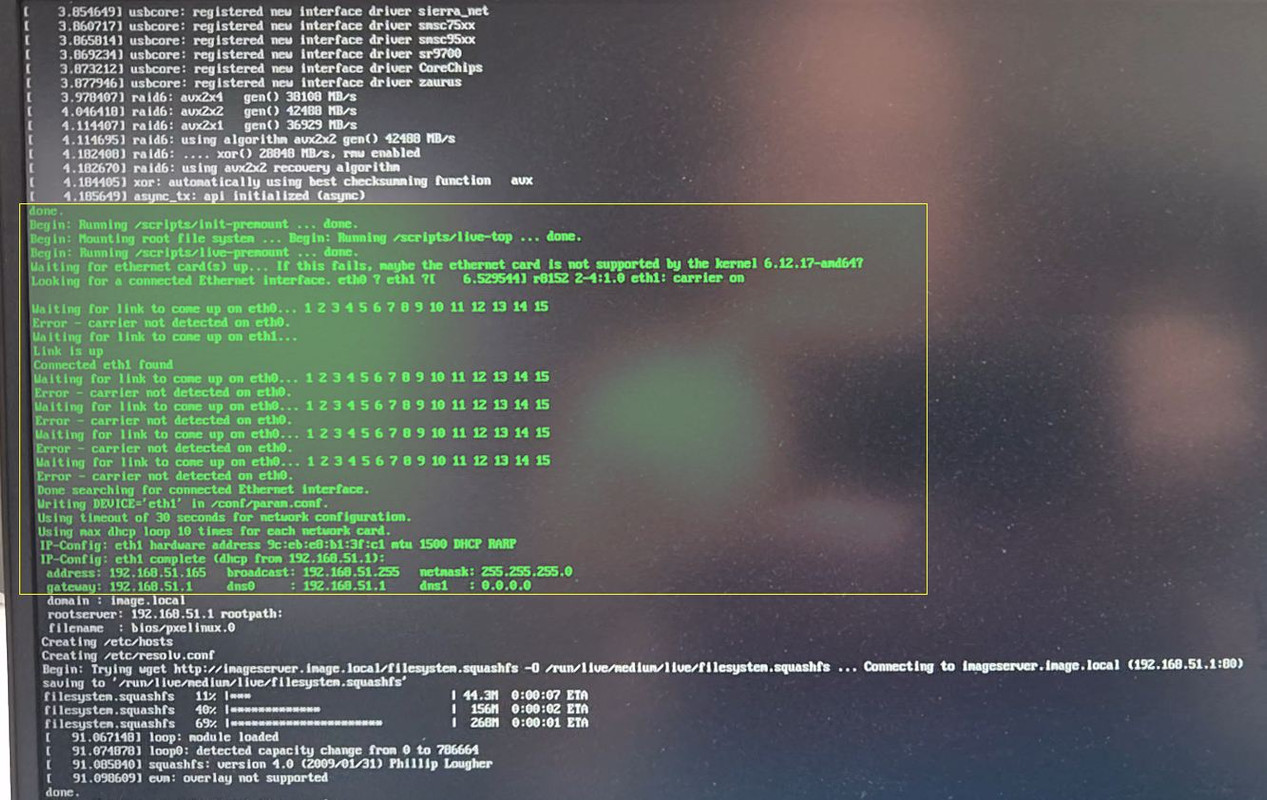


Thanks Steven. I was confused looking at the Clonezilla source: I couldn't find the /usr/lib/live/boot files there and was only able to find https://github.com/grml/grml-live-grml on GitHub.

Please also consider adding a boot parameter for the loop in Select_eth_device(). I made my changes as follows, and I pass ethupwait=3 ethloop=1. I'm not home, so I can't paste the output from /var/log/clonezilla/, but I will post a reply after I get home.

```diff
--- usr/lib/live/boot/9990-select-eth-device.sh	2025-03-01 04:05:57.000000000 -0800
+++ /var/lib/tftpboot/sw/clonezilla.3.2.2-15/temp/main/usr/lib/live/boot/9990-select-eth-device.sh	2025-08-19 03:58:17.972235027 -0700
@@ -2,10 +2,20 @@ Wait_for_carrier ()
 {
+	ETHUPWAIT=15
+	for ARGUMENT in ${LIVE_BOOT_CMDLINE}
+	do
+		case "${ARGUMENT}" in
+			ethupwait=*)
+				ETHUPWAIT="${ARGUMENT#ethupwait=}"
+				;;
+		esac
+	done
+
 	# $1 = network device
 	echo -n "Waiting for link to come up on $1... "
 	ip link set $1 up
-	for step in $(seq 1 15)
+	for step in $(seq 1 ${ETHUPWAIT})
 	do
 		carrier=$(cat /sys/class/net/$1/carrier \
 			2>/dev/null)
@@ -122,7 +132,17 @@ echo ''
-	for step in 1 2 3 4 5
+	ETHLOOP=5
+	for ARGUMENT in ${LIVE_BOOT_CMDLINE}
+	do
+		case "${ARGUMENT}" in
+			ethloop=*)
+				ETHLOOP="${ARGUMENT#ethloop=}"
+				;;
+		esac
+	done
+
+	for step in $(seq 1 ${ETHLOOP})
 	do
 		for interface in $l_interfaces
 		do
```
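The patch above repeats the same command-line parsing loop twice and passes whatever value it finds straight to `seq`. A minimal sketch of a shared helper, assuming the same whitespace-separated `LIVE_BOOT_CMDLINE` the patch reads; `get_num_param` is a hypothetical name, not part of live-boot or Clonezilla, and it additionally falls back to the default when the value is not a positive integer:

```shell
#!/bin/sh
# Sketch: the "name=value on the kernel command line" lookup from the patch,
# factored into one helper. get_num_param is a hypothetical name.
# It falls back to DEFAULT when the parameter is missing, empty, or not a
# positive integer, so "seq 1 $value" in the caller always gets a number.
get_num_param() {  # usage: get_num_param NAME DEFAULT CMDLINE
  gp_name=$1
  gp_value=$2
  # CMDLINE is deliberately unquoted so it splits into words, like
  # "for ARGUMENT in ${LIVE_BOOT_CMDLINE}" in the patch.
  for gp_arg in $3; do
    case $gp_arg in
      "$gp_name"=*) gp_value=${gp_arg#"$gp_name"=} ;;
    esac
  done
  # Reject empty or non-numeric values.
  case $gp_value in
    ''|*[!0-9]*) gp_value=$2 ;;
  esac
  # Reject zero (and values like "00"), which would make seq produce nothing.
  [ "$gp_value" -gt 0 ] 2>/dev/null || gp_value=$2
  echo "$gp_value"
}

# In the patched script this could replace both parsing loops, e.g.:
#   ETHUPWAIT=$(get_num_param ethupwait 15 "${LIVE_BOOT_CMDLINE}")
#   ETHLOOP=$(get_num_param ethloop 5 "${LIVE_BOOT_CMDLINE}")
```

With `ethupwait=3 ethloop=1` on the command line this yields 3 and 1; a typo such as `ethloop=abc` falls back to the hardcoded default instead of breaking the loop.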


Hi all,

We are using Clonezilla for the production of our laptops.

We have laptops with and without an onboard NIC. Because we use Clonezilla in a production environment, all laptops are connected the same way, through a USB NIC. This USB NIC is the second NIC (eth1).

The problem is with the dual-NIC configurations.

When PXE booting, the laptop first does a DHCP broadcast, and this is done directly on eth1.

Then the system loads its initrd and filesystem.squashfs.

While processing these files, it reinitializes the network.

First it counts from 1 to 15 five times, waiting for eth0 (not connected) to get an active link.

Then it initializes eth1 and continues booting.

Counting to 15 five times costs us a lot of waiting time.

During the summer we produce around 100,000 systems, of which roughly 20-30% boot with two NICs.

So about 25,000 boots, each waiting 4 times through a 1-15 count for an unconnected NIC, add up to a lot of wasted time.
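A quick back-of-the-envelope check of that estimate, assuming each count of 1-15 takes one second and using the roughly 25,000 dual-NIC boots and 4 extra rounds mentioned above:

```shell
#!/bin/sh
# Cost of the extra link waits per season, using the figures above:
# ~25,000 dual-NIC boots, each waiting 4 extra rounds of a 15-second count
# (assumed to be one count per second).
boots=25000
rounds=4
secs_per_round=15

wasted=$((boots * rounds * secs_per_round))
# Integer division, so the hours are rounded down.
echo "wasted: $wasted seconds (~$((wasted / 3600)) hours)"
# prints: wasted: 1500000 seconds (~416 hours)
```

That is about 60 seconds per boot, or more than 17 days of accumulated waiting over a season.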

I have already unpacked the initrd and unsquashed filesystem.squashfs.

I couldn't find any parameters in the initrd, so I continued searching in the squashfs.

There I found the variable iretry_max_default="5" in /usr/sbin/mknic-nbi.

I changed it to 1, re-squashed the file and booted, but nothing changed.

I would really appreciate it if someone could tell me where I can change this.

Actually it's an undocumented parameter for live-boot.

In the initrd you should be able to find ethdevice-timeout in the files 9990-cmdline-old and 9990-networking.sh under usr/lib/live/boot/.

So for your PXE clients, you can add "ethdevice-timeout=5" to the PXE config, i.e., /tftpboot/nbi_img/grub/grub.cfg or /tftpboot/nbi_img/pxelinux.cfg/default, after you start the Clonezilla lite server.
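A minimal sketch of adding such a parameter to the pxelinux config from a shell, assuming the kernel command line sits on lines starting with lowercase "append" (pxelinux syntax). `add_boot_param` is a hypothetical helper, and grub.cfg, which keeps the command line on "linux" lines, would need a different pattern:

```shell
#!/bin/sh
# Sketch: append a boot parameter (in name=value form) to every pxelinux
# "append" line in FILE, skipping lines that already set that name.
# add_boot_param is a hypothetical helper; only lowercase "append" lines
# are matched.
add_boot_param() {  # usage: add_boot_param FILE NAME=VALUE
  file=$1
  param=$2
  cp "$file" "$file.bak" || return 1   # keep a backup of the original
  awk -v p="$param" '
    BEGIN { split(p, kv, "="); key = kv[1] "=" }
    /^[ \t]*append[ \t]/ && index($0, key) == 0 { $0 = $0 " " p }
    { print }
  ' "$file.bak" > "$file"
}

# Example, on the lite server after it has been started:
#   add_boot_param /tftpboot/nbi_img/pxelinux.cfg/default ethdevice-timeout=5
```

Skipping lines that already contain the name keeps the edit idempotent, so running it twice does not add the parameter twice.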

However, if you are sure your USB NIC will always be eth1, it would be easier to add another undocumented parameter, "ethdevice=eth1", to the PXE config file.

Please let us know your results.

Ref: https://clonezilla.org//fine-print-live-doc.php?path=./clonezilla-live/doc/99_Misc/00_live-boot-parameters.doc#00_live-boot-parameters.doc

Last edit: Steven Shiau 2025-05-15

BTW, we will update the doc to mention these two parameters: ethdevice and ethdevice-timeout

Hi Steven,

I have tried all the solutions you provided, and I'm sorry to say they didn't do what I needed.

Maybe I did something wrong, or I didn't explain the problem well.

Using ethdevice-timeout=5:

When the DHCP request isn't answered right away, it retries for 5 seconds instead of 30.

But the problem happens before the DHCP request: it waits for the link to come up on eth0, which never happens because the NIC with the active link is eth1.

Using ethdevice=eth1 or livenetdev=eth1:

This works when the active NIC is eth1; then the wait for eth0 to get an active link is gone. A system with only one NIC (eth0) also boots, since eth1 doesn't exist in that case.

But when booting from eth0 on a system that also has an eth1, it waits for eth1 to get an active link. So this workaround is not really what we want; we lose a lot of flexibility in the boot environment.

Some of our servers, which we also image with Clonezilla, have multiple NICs, and eth1 will not always be the boot NIC.

I have made a video (downloadable for one week) here,

and also a picture of the problem (taken from the video).

The problem resides in /usr/lib/live/boot/9990-select-eth-device.sh.

Wait_for_carrier() loops 15 times waiting for a link on each network device. Then, about 75% of the way down the script, the "for step" loop repeats this 5 times. I'm not sure what the rationale is for such conservative settings, but I agree it's too long. The loops are hardcoded and not adjustable at runtime. That's a pain when I have 4 network ports and only 1 is linked up; in the past I've had 6 ports on a system.
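To make the timing concrete, here is a simplified sketch of a link wait with an adjustable timeout, assuming the same sysfs carrier file the live-boot script reads (/sys/class/net/<dev>/carrier). It is not the live-boot code: live-boot also brings each link up with `ip link set` and repeats the whole scan 5 times. The function names and the `SYSFS_NET` override are hypothetical, the latter only there so the sketch can be tried against a fake directory tree:

```shell
#!/bin/sh
# Simplified sketch (not the live-boot code): wait up to N seconds for a
# link on one interface, then move on to the next interface.
# SYSFS_NET is a hypothetical override; on a real system it is /sys/class/net.
SYSFS_NET=${SYSFS_NET:-/sys/class/net}

# wait_for_link DEV SECONDS: succeed as soon as DEV's carrier reads 1.
wait_for_link() {
  wl_dev=$1
  wl_secs=$2
  wl_step=1
  while [ "$wl_step" -le "$wl_secs" ]; do
    # The carrier file may be unreadable while the interface is down.
    if [ "$(cat "$SYSFS_NET/$wl_dev/carrier" 2>/dev/null)" = "1" ]; then
      return 0
    fi
    # Sleep between polls, but not after the last one.
    if [ "$wl_step" -lt "$wl_secs" ]; then
      sleep 1
    fi
    wl_step=$((wl_step + 1))
  done
  return 1
}

# first_linked SECONDS DEV...: print the first DEV that gets a link.
# Every unplugged DEV before it costs roughly SECONDS of waiting.
first_linked() {
  fl_secs=$1
  shift
  for fl_dev in "$@"; do
    if wait_for_link "$fl_dev" "$fl_secs"; then
      echo "$fl_dev"
      return 0
    fi
  done
  return 1
}
```

On a dual-NIC laptop with only the USB NIC cabled, `first_linked 3 eth0 eth1` gives up on eth0 after three polls and prints eth1; with a hardcoded 15 and an outer loop of 5, the same unplugged eth0 costs far longer.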

Clonezilla 3.0.1-8 doesn't have this issue.

Hi Ken,

Thanks for your insight.

If I change this in the code and compress it back to a squashfs, does this work?

Or is another approach needed to make this change?

EDIT 2:

Instead of using mkinitramfs, create it manually. The main fs is compressed with xz.

EDIT:

I was totally wrong about what I wrote below the horizontal line. I did some more searching, and it turns out initrd.img contains more than kernel modules. It's just that Ubuntu 24.04's cpio stops listing after it encounters "." within the archive.

lsinitramfs shows everything, including /usr/lib/live/boot. Use unmkinitramfs to extract, modify, then mkinitramfs to recreate. It looks like the cpio archive isn't standard, or there are some additional markers that Linux uses. For example, unmkinitramfs extracts the kernel modules into an "early" directory while the rest of the rootFS resides in a "main" directory.
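A sketch of that extract, edit, repack cycle, assuming the layout described above (an uncompressed "early" cpio followed by an xz-compressed "main" cpio) and cpio and xz on the build machine. `repack_initrd` is a hypothetical helper; `--check=crc32` follows the Linux kernel's xz documentation, which recommends it for initramfs images:

```shell
#!/bin/sh
# Sketch: rebuild an initrd that unmkinitramfs split into DIR/early
# (uncompressed cpio) and DIR/main (xz-compressed cpio).
# repack_initrd is a hypothetical helper; images with extra early2,
# early3... parts would need those appended in order as well.
#
# Typical cycle:
#   unmkinitramfs initrd.img work
#   (edit work/main/usr/lib/live/boot/9990-select-eth-device.sh)
#   repack_initrd work initrd-new.img

repack_initrd() {  # usage: repack_initrd DIR OUTPUT
  src=$1
  out=$2
  if [ ! -d "$src/main" ]; then
    echo "repack_initrd: $src/main not found" >&2
    return 1
  fi
  : > "$out" || return 1
  if [ -d "$src/early" ]; then
    # The early part stays uncompressed, in the "newc" cpio format.
    (cd "$src/early" && find . | cpio -o -H newc) >> "$out" || return 1
  fi
  # The main part: newc cpio piped through xz with CRC32 integrity checks.
  (cd "$src/main" && find . | cpio -o -H newc) | xz --check=crc32 >> "$out" || return 1
}
```

The two archives are simply concatenated, which matches how unmkinitramfs found them: the kernel reads the uncompressed part first, then decompresses the rest.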

---

No, it won't.

The squashfs is loaded after the network interfaces are brought up. I don't have any experience creating live CDs and the like, but there appears to be a small rootFS embedded within vmlinuz (run "file vmlinuz" and it reports not only a boot executable but also a rootFS and swap), and that's what gets used when the kernel runs. initrd.img only has kernel modules, nothing else. I'm not familiar with how a vmlinuz gets created or whether there are utilities that allow each component to be extracted and recombined. /usr/sbin/ocs-iso creates the ISO. There are a bunch of programs in /usr/sbin that seem to help out (create, drbl, and of course ocs*). Maybe knowing how update-initramfs works would help.

I feel the easiest way is to follow https://clonezilla.org/create_clonezilla_live_from_scratch.php and create a new ISO after making the changes you want. That webpage specifically mentions installing a certain version of live-build. I'm not sure why, though, as that package was released very close to when 2.6.6-15 was. Maybe it's for those who want to follow the procedure specifically for 2.6.6-15 in the future, which requires an old version of live-build.

Last edit: Ken 2025-08-19

@Francois,

Somehow I missed your reply on Jun 10. I thought your issue was about the DHCP timeout. Yesterday I realized your issue is actually the NIC link-detection timeout. As Ken mentioned, that is a fixed number (15 secs) in live-boot:

https://salsa.debian.org/live-team/live-boot/-/blob/master/components/9990-select-eth-device.sh?ref_type=heads

We have patched live-boot to add an extra boot parameter, "ethdevice-link-timeout", in Clonezilla live >= 3.3.0-6 or 20250820-*:

https://clonezilla.org///downloads.php

With that, you can now set the number of seconds to wait. After your lite server is started, edit the files:

/tftpboot/nbi_img/grub/grub.cfg

and

/tftpboot/nbi_img/pxelinux.cfg/default

Change "ethdevice-link-timeout=7" to what you like, e.g., "ethdevice-link-timeout=3" to wait 3 secs.
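A minimal sketch for changing the value in both files at once, assuming the parameter already appears as ethdevice-link-timeout=<number> and that GNU or BusyBox sed (whose `-i` edits in place) is available on the lite server. `set_link_timeout` is a hypothetical helper name:

```shell
#!/bin/sh
# Sketch: set ethdevice-link-timeout to a new value in each given file,
# assuming the parameter is already present as ethdevice-link-timeout=<n>.
# set_link_timeout is a hypothetical helper; sed -i is the GNU/BusyBox
# in-place option.
set_link_timeout() {  # usage: set_link_timeout SECONDS FILE...
  secs=$1
  shift
  case $secs in
    ''|*[!0-9]*)
      echo "set_link_timeout: '$secs' is not a whole number of seconds" >&2
      return 1
      ;;
  esac
  for f in "$@"; do
    sed -i "s/ethdevice-link-timeout=[0-9]*/ethdevice-link-timeout=$secs/g" "$f" || return 1
  done
}

# Example, on the lite server after it has been started:
#   set_link_timeout 3 /tftpboot/nbi_img/grub/grub.cfg /tftpboot/nbi_img/pxelinux.cfg/default
```

Validating the number first keeps a typo from being written into every boot entry.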

For more info, please check:

https://clonezilla.org//downloads/testing/changelog.php

and

https://clonezilla.org///fine-print-live-doc.php?path=./clonezilla-live/doc/99_Misc/00_live-boot-parameters.doc#00_live-boot-parameters.doc

Please let us know the results if you test that. Thanks.

Thanks Steven. I was confused looking at the Clonezilla source: I couldn't find the /usr/lib/live/boot files there and was only able to find https://github.com/grml/grml-live-grml on GitHub.

Please also consider adding a boot parameter for the loop in Select_eth_device(). I made my changes as follows, and I pass ethupwait=3 ethloop=1. I'm not home, so I can't paste the output from /var/log/clonezilla/, but I will post a reply after I get home.

@Ken, have you tested Clonezilla live >= 3.3.0-6 or 20250820-*?

https://clonezilla.org/downloads.php

We have implemented a similar mechanism to deal with that. The details are described in my previous post, i.e.,

https://sourceforge.net/p/clonezilla/discussion/Open_discussion/thread/579168f80f/?limit=25#c7f7

Please give it a try.

Thanks.

Last edit: Steven Shiau 2025-08-21

No, but here's how my patched initrd.img works. If you patched only Wait_for_carrier() and not Select_eth_device(), the wait for a link is still repeated 5 times.

Hi Ken and Steven,

I have tested the latest test version (3.3.0-17) and I am very happy with the change.

In the simulated environment with a dual-NIC client, the boot time dropped from 1m51s to 51 seconds. This makes us very happy :)

When will this change get deployed into the stable version?

"When will this change get deployed into the stable version?" -> Not very sure. However, we definitely will have another stable one in late Oct.

If Clonezilla live 3.3.0-17 works for you, you can stick to that version.